Paul Linsay contributes the following:

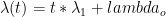

Using Landsea’s data for 1945 to 2004 from here, plus counts of 15 and 5 hurricanes in 2005 and 2006 respectively, I plotted the yearly North Atlantic hurricane counts and added error bars equal to √N, where N is that year's count, as is appropriate for counting statistics.

The result is in Figure 1.

Figure 1. Annual hurricane counts with statistical errors indicated by the red bars. The dashed line is the average number of hurricanes per year, 6.1.
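A minimal Python sketch of the √N rule behind the bars in Figure 1. It assumes each year's count is treated as a single Poisson observation, so that the best estimates of both its mean and its variance are N. The function name `counting_error_bar` is illustrative and does not come from the original analysis.

```python
import math

def counting_error_bar(n):
    """Return (n - sqrt(n), n + sqrt(n)): the one-sigma counting-statistics bar for a count n."""
    sigma = math.sqrt(n)
    return n - sigma, n + sigma

# The two counts added to Landsea's data in the post
for year, n in [(2005, 15), (2006, 5)]:
    low, high = counting_error_bar(n)
    print(f"{year}: {n} +/- {math.sqrt(n):.1f} -> [{low:.1f}, {high:.1f}]")
```

This prints 15 +/- 3.9 for 2005 and 5 +/- 2.2 for 2006. The symmetric bar is only an approximation. A Poisson count cannot fall below zero, so for small N the real spread is lopsided.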

There is no obvious long-term trend anywhere in the plot. There is enough noise that many very different curves could fit these data well, especially curves derived from data as noisy as the SST data.

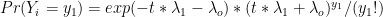

I next histogrammed the counts and overlaid the histogram with a Poisson distribution computed with an average of 6.1 hurricanes per year. The Poisson distribution was multiplied by 63, the number of data points, so that its area would match the area of the histogram. The results are shown in Figure 2. Given the very small number of data points available, the Poisson distribution is an excellent match to the hurricane distribution. I should also point out that I did no fitting to get this result.

Figure 2. Histogram of the annual hurricane counts (red line) overlaid with a Poisson distribution (blue line) with an average of 6.1 hurricanes per year.
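The overlay can be sketched in plain Python as follows. This is a minimal sketch that uses the 63 annual counts (1944-2006) a commenter keyed into R further down this thread. He cautions that he may have made keying errors, so treat the numbers as illustrative. The helper names are hypothetical.

```python
import math
from collections import Counter

# Annual North Atlantic hurricane counts, 1944-2006, as keyed into R by a commenter
# later in this thread (he cautions that he may have made keying errors).
COUNTS = [7, 5, 3, 5, 6, 7, 11, 8, 6, 6,    # 1944-1953
          8, 9, 4, 3, 7, 7, 4, 8, 3, 7,     # 1954-1963
          6, 4, 7, 6, 4, 12, 5, 6, 3, 4,    # 1964-1973
          4, 6, 6, 5, 5, 5, 9, 7, 2, 3,     # 1974-1983
          5, 7, 4, 3, 5, 7, 8, 4, 4, 4,     # 1984-1993
          3, 11, 9, 3, 10, 8, 8, 9, 4, 7,   # 1994-2003
          9, 15, 5]                         # 2004-2006

def poisson_pmf(k, lam):
    """P(X = k) for X ~ Poisson(lam)."""
    return math.exp(-lam) * lam ** k / math.factorial(k)

def expected_histogram(n_years, lam, k_max):
    """Poisson pmf scaled by the number of years so its area matches the histogram's."""
    return [n_years * poisson_pmf(k, lam) for k in range(k_max + 1)]

n_years = len(COUNTS)
lam = sum(COUNTS) / n_years          # the sample mean (about 6.1); nothing is fitted
observed = Counter(COUNTS)
expected = expected_histogram(n_years, lam, max(COUNTS))
for k, e in enumerate(expected):
    print(f"{k:2d} hurricanes: {observed.get(k, 0):2d} years observed, {e:5.2f} expected")
```

Only the mean enters the Poisson curve. This is consistent with the point that no fitting was done.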

I conclude from these two plots that

- The annual hurricane counts from 1945 through 2006 are 100% compatible with a random Poisson process with a mean of 6.1 hurricanes per year. The trends and groupings seen in Figure 1 are due to random fluctuations and nothing more.

- The trend in Judith Curry’s plot at the top of this thread is a spurious result of the 11 year moving average, an edge effect, and some random upward (barely one standard deviation) fluctuations following 1998.

188 Comments

Solow and Moore 2000, cited by Roger Pielke, also fitted a Poisson model to hurricane data and concluded that there was no trend in the hurricane data that had then accrued. The 2005 hurricane count does appear loud to me in Poisson terms, but then so would 1933 and 1886, so the process may be a bit long-tailed.
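How loud is 2005? The sketch below assumes iid Poisson years with the post's mean of 6.1. It computes the chance that a single year reaches 15. It also computes the chance that at least one of 63 such years does, which is arguably the more relevant number when the extreme year is picked out after the fact. The constants are taken from the post, and the function names are illustrative.

```python
import math

def poisson_pmf(k, lam):
    """P(X = k) for X ~ Poisson(lam)."""
    return math.exp(-lam) * lam ** k / math.factorial(k)

def upper_tail(k, lam):
    """P(X >= k) for X ~ Poisson(lam)."""
    return 1.0 - sum(poisson_pmf(j, lam) for j in range(k))

LAM, N_YEARS = 6.1, 63                        # mean rate and record length quoted in the post
p_year = upper_tail(15, LAM)                  # one given year reaching 2005's count of 15
p_record = 1.0 - (1.0 - p_year) ** N_YEARS    # at least one such year in the whole record
print(f"P(a given year >= 15) = {p_year:.4f}; P(any of {N_YEARS} years >= 15) = {p_record:.3f}")
```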

Steve, nice job!

I question the meaning of “error bars” in the first figure. Ignoring the undercount issues, we know the values exactly. The second graph makes the point that the data could have come from a Poisson population.

Steve: TAC, you mean, “nice job, Paul “

I'm not very familiar with this; is there a reference for a layman?

#3 — The error bars in Figure 1 assume a completely random process. That would be the null assumption (no deterministic drivers). The Poisson plot shows that the system has a driver, but is a random process within the bounds determined by the driver.

It’s a lovely result, Paul, congratulations. You must have laughed with delight when you saw that correlation spontaneously emerge, and with no fitting at all. That feeling is the true reward of doing science. I expect Steve M. has experienced that, too, now.

In a strategic sense, your result, Paul, shows that a large fraction of the population of climatologists have a pre-determined mental paradigm, namely AGW, and are looking for trends confirming that paradigm. They have gravitated toward analyses (an 11-year smoothing that produces autocorrelation, for example) that produce likely trends in the data. These are getting published by editors who also accept the paradigm and so accept unquestioningly as correct the analyses that support it. Ralph Cicerone’s recent shameful accommodation of Hansen’s splice at PNAS is an especially obvious example of that. These are otherwise good scientists who have decided they know the answer without actually (objectively) knowing, and end up enforcing only their personal certainties.

Honestly, your result deserves a letter to the same journal where Emanuel published his trendy (in both senses) hurricane analysis. Why not write it up? It’s clearly going to take outside analysts to bring analytical modesty back to the field. Being shown wrong is one thing in science. Being shown foolishly wrong is quite another.

Actually, now that I think about it, does the pre-1945 count produce a Poisson distribution with a different mean? If so, you could show that, then include Margo’s correction of the pre-1945 count, add the corrected count to your data set and see if the Poisson relationship extends over the whole set. Co-publish with Margo. It will set the whole field on its ear. 🙂 Plus, you’ll have a really great time.

#4 “Emanuel” — that should have been Holland and Webster (Phil. Trans. Roy. Soc. A) — but then, your result deserves a wider readership than that.

Nice job, Paul!

re #4:

For that, one could use, e.g., the test I referred to here. Since people seem to have both interest and time (unfortunately I’m lacking both right now), just a small hint 😉 :

I think the SST data were available somewhere here. Additionally, R users can look here:

http://sekhon.berkeley.edu/stats/html/glm.html

and Matlab users here:

http://www.mathworks.com/products/statistics/demos.html?file=/products/demos/shipping/stats/glmdemo.html

Too funny! I was just reading Jean S’s suggestion on the other thread. My precise thought was: I should correct my analysis for Poisson distributions! 🙂

Paul, ha, like yesterday you beat me to it again. I plotted the data from Judith Curry’s link from yesterday, and it is not quite as good a fit to a Poisson distribution as the graph you show above, but pretty good. To look at the correlation to a Poisson distribution, I plot the fractional probability from the hurricanes per year (the number of years out of 63 that have a particular number of hurricanes, divided by the total of 63 years) against the probability for that particular number of hurricanes from a Poisson distribution with that mean value. A perfect correlation would then be a straight line of gradient 1. An analysis of the error bars on that graph shows a good correlation to a Poisson distribution, with R² ≈ 0.7, but the error bars are so large (a consequence of the small numbers) that it is very difficult to rule anything in or out.
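A minimal sketch of this probability-versus-probability comparison. It is applied here to the counts keyed into R later in the thread, not to the dataset from Judith Curry's link, so the R² it prints need not match the ~0.7 quoted. R² alone does not check the "gradient 1" condition. A full comparison would also look at the slope.

```python
import math
from collections import Counter

# Annual counts 1944-2006 as keyed into R by a commenter further down (may contain keying errors)
COUNTS = [7, 5, 3, 5, 6, 7, 11, 8, 6, 6, 8, 9, 4, 3, 7, 7, 4, 8, 3, 7, 6,
          4, 7, 6, 4, 12, 5, 6, 3, 4, 4, 6, 6, 5, 5, 5, 9, 7, 2, 3, 5, 7,
          4, 3, 5, 7, 8, 4, 4, 4, 3, 11, 9, 3, 10, 8, 8, 9, 4, 7, 9, 15, 5]

def poisson_pmf(k, lam):
    """P(X = k) for X ~ Poisson(lam)."""
    return math.exp(-lam) * lam ** k / math.factorial(k)

def r_squared(xs, ys):
    """Square of the Pearson correlation between two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy * sxy / (sxx * syy)

n_years = len(COUNTS)
lam = sum(COUNTS) / n_years
freq = Counter(COUNTS)
ks = range(max(COUNTS) + 1)
observed = [freq.get(k, 0) / n_years for k in ks]   # fraction of years with k hurricanes
predicted = [poisson_pmf(k, lam) for k in ks]      # Poisson probability of k hurricanes
print(f"R^2 = {r_squared(predicted, observed):.2f}")
```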

#2 TAC, the words ‘error bars’ here are not ‘errors’ in the sense of measurement errors. The assumption here is that the number of hurricanes is the actual true number, with no undercounting or any other artifacts distorting the data (as if you had god-like powers and could count every one infallibly). But if you have a stochastic process (something that is generated randomly), then just because you have 5 hurricanes in one year, you may get 3, or 8, in the next year, even if all the physical parameters are exactly the same. Observed over many years, you will get a spread of numbers of hurricanes per year with a certain standard deviation. That perfectly natural spread is what has been referred to as the ‘error bar’. It is a property of a Poisson distribution that if you have N discrete counts of something, then the standard deviation of the distribution is equal to the square root of N. Try Poisson distribution on Wikipedia. As N becomes larger and larger, the asymmetry reduces and it looks much more like a normal distribution. But where you have a small number of discrete ‘things’, as here with small numbers of hurricanes each year, the distribution is asymmetrical (because you can’t have fewer than 0 storms per year).
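For reference, here are the standard Poisson results this comment relies on. λ is the true mean rate, and a single observed count N is the usual estimate of λ. That is where σ = √N comes from:

$$
P(X = k) = \frac{\lambda^{k} e^{-\lambda}}{k!}, \qquad k = 0, 1, 2, \ldots
$$

$$
\mathrm{E}[X] = \mathrm{Var}[X] = \lambda, \qquad \sigma = \sqrt{\lambda}, \qquad \text{skewness} = \frac{1}{\sqrt{\lambda}}
$$

With λ ≈ 6.1, this gives σ ≈ 2.5 and a skewness of about 0.4. The skewness falls off only as 1/√λ, which is the asymmetry described above.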

I would say that the excellent fit to a Poisson distribution shows that hurricanes are essentially randomly produced and what is more, the small number of hurricanes per year (in a statistical sense) makes determining trends statistically nonsense unless you have vastly more data (vastly more years).

As I noted in the other thread yesterday, concluding that hurricanes are randomly produced does NOT preclude a correlation with SST, AGW, the Stock Market, marriages in the Church of England or anything else. If there is a correlation with SST over time, for the sake of argument, what you would see is a Poisson distribution in later years with a higher average than in earlier years. What always seems to have been omitted in previous arguments, such as Judith Curry’s 11-year moving average, is that the error bars (natural limitations on confidence) are very large. On these data, there is almost no basis for being certain whether that average really has gone up or not. You might suspect a trend, but on these numbers you can’t show a significant increase with any level of confidence.

By the same token, Landsea’s correction is way down in the noise.

#3 Steve and Paul, I apologize for mixing up your names. Oops!

#4 Re error bars: By convention these are used to indicate uncertainty corresponding to a plotted point. In this case, there is no uncertainty (again, of course, ignoring the fact that there is uncertainty because of the undercount!). Thus the error bars should be omitted from the first figure.

Going out on a limb, I venture to say that the error bars were computed to show something else: that each observation is individually “consistent with” the assumption of a Poisson df (perhaps with lambda equal to about 6). Anyway, it appears that each error bar is computed based only on a single datapoint (N=1). This procedure results in 62 distinct interval estimates of lambda. However, it is not at all clear why we would want these 62 estimates. The null hypothesis is that the data are iid Poisson, so we can safely use all the data to come up with the “best” single estimate of lambda and then test H0 by considering how likely it is that we would have observed the 62 observations if H0 is true (e.g. with a Kolmogorov-Smirnov test).
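The K-S test is awkward for discrete data. The sketch below therefore swaps in a different, commonly used check of the same iid-Poisson null: the index-of-dispersion statistic D = Σ(x − x̄)²/x̄. This should be near n − 1 if the variance equals the mean. The p-value comes from a parametric bootstrap. The Poisson sampler uses Knuth's simple multiplication method. The data are the counts keyed into R later in the thread, and the seed and simulation count are arbitrary choices.

```python
import math
import random

# Annual counts 1944-2006 as keyed into R by a commenter further down (may contain keying errors)
COUNTS = [7, 5, 3, 5, 6, 7, 11, 8, 6, 6, 8, 9, 4, 3, 7, 7, 4, 8, 3, 7, 6,
          4, 7, 6, 4, 12, 5, 6, 3, 4, 4, 6, 6, 5, 5, 5, 9, 7, 2, 3, 5, 7,
          4, 3, 5, 7, 8, 4, 4, 4, 3, 11, 9, 3, 10, 8, 8, 9, 4, 7, 9, 15, 5]

def dispersion_index(xs):
    """Sum of squared deviations divided by the mean; about len(xs) - 1 under a Poisson null."""
    m = sum(xs) / len(xs)
    return sum((x - m) ** 2 for x in xs) / m

def poisson_draw(lam, rng):
    """Knuth's multiplication method; adequate for small lam such as 6."""
    limit, k, p = math.exp(-lam), 0, 1.0
    while True:
        p *= rng.random()
        if p <= limit:
            return k
        k += 1

def dispersion_p_value(xs, n_sim=2000, seed=1):
    """Two-sided Monte Carlo p-value for D under iid Poisson with the sample mean."""
    rng = random.Random(seed)
    lam = sum(xs) / len(xs)
    d_obs = dispersion_index(xs)
    sims = [dispersion_index([poisson_draw(lam, rng) for _ in xs]) for _ in range(n_sim)]
    upper = sum(d >= d_obs for d in sims) / n_sim
    lower = sum(d <= d_obs for d in sims) / n_sim
    return min(1.0, 2 * min(upper, lower))

d = dispersion_index(COUNTS)
print(f"D = {d:.1f} on {len(COUNTS) - 1} df, Monte Carlo p = {dispersion_p_value(COUNTS):.2f}")
```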

Finally, I agree with you that it is a “lovely result,” as you say 🙂

Argh, sorry TAC, just realised I misread the comment numbers and should have addressed my comments on Poisson statistics to UC in #3, not you.

There was a link to hurricane data that was inadvertently dropped.

#3. UC, The best I can do for you is “Radiation Detection and Measurement, 2nd ed”, G. F. Knoll, John Wiley, 1989. Chapter 3 is a good discussion of counting statistics. Suppose you have a single measurement of N counts and assume a Poisson process. What is the best estimate of the mean? N. What is the best estimate of the variance? N.

#4, Pat

How does it do that?

The analyses all around here at CA on TC frequencies and intensities have been most revealing to me, but the results have not been all that surprising once I understood that the potential for cherry picking (along with evidence of how poorly the picking process is understood by those doing it) was significantly greater than I would have initially imagined. What will be more revealing to me will be the reactions to these analyses.

The analyses say be very careful how you use the data, but I fear the inclination is, as Pat Frank indicates: here is what we suspect is true, and here is the analysis from these selected data to substantiate it.

#9 TAC (really this time), I have to disagree with you about the ‘error bars’ on the graph; they shouldn’t be omitted, they are vital. There may be no uncertainty in the measured number of storms in a year, but what should be plotted is a ‘confidence limit’ (‘error bar’ is perhaps a loaded term). If you could run the year over and over again, you would get a spread of values; that is the meaning of the uncertainty or ‘error bar’ plotted in Figure 1.

#13 IL, do you agree that the same value of the standard deviation should apply to every observation? If not — and the graph clearly indicates that it does not — could you explain to me why?

There is a scientific response to this: “Ack!”

The RealClimate response: “*sigh* so how much was Paul Linsay paid through proxies some laundered money from Exxon-Mobil to confuse the public with regard to the scientific consensus on global warming?”

My response: “Holy crap, we’ve been trying to predict a random process all of this time. Someone should call Hansen and Trenberth and recommend a ouija board for their next forecast – unless they’re already using one”

#14, no, because the count in each year is an independent measurement, and the years are not necessarily measuring the same thing. It is conceivable, for example, that a later year has been affected by a positive correlation with SST (or any other mechanism), so the two periods would not have the same average. If you have measured a count of N, then the variance of that in Poisson statistics is N. If you hypothesize that a number of years are all the same, so that you can add all the counts together, then you have a larger value of N and a lower fractional uncertainty. The fractional uncertainty is the standard deviation divided by N, and since the standard deviation is the square root of N, the fractional uncertainty is (root N)/N = 1/(root N). Thus, as N increases, the relative uncertainty decreases.
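Written out, with N the pooled count and σ_N = √N its Poisson standard deviation:

$$
\frac{\sigma_N}{N} = \frac{\sqrt{N}}{N} = \frac{1}{\sqrt{N}}
$$

For a single year with N = 6, this is 1/√6 ≈ 0.41, or about 41%. Pooling 63 years at roughly 6.1 per year gives N ≈ 384, so 1/√384 ≈ 0.051, or about 5%.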

If you treat each year as separate then I believe that what Paul has done in figure 1 is correct.

John A – just because it is a random process does not mean that there cannot be a correlation with

#8

It's quite reasonable to look to see if there is a correlation with time, SST or whatever. I have no problems with that. What I do think, though, is that the statistics of these small numbers make the uncertainties huge, so the amount of data you need to be able to confidently say that there is a real trend is far larger than is available. You would need many more years of data to reduce the uncertainties. Alternatively, if there were a real underlying correlation with SST (or whatever), it would have to be much more pronounced to stick up above the natural scatter.

Il,

Well, under the null hypothesis the “years” are measuring the same thing. Each one is an iid variate from the same population. The variates (not the observations) have the same mean, variance, skew, kurtosis, etc. Honest!

I’ll try to find a good reference on this and post it.

Elsner is using Poisson regression for Hurricane prediction:

Elsner & Jagger: Prediction models for annual U.S. hurricane counts, Journal of Climate, v19, 2935-2952, June 2006.


He has some other interesting looking publications here:

http://garnet.fsu.edu/~jelsner/www/research.html

and a (recently updated) blog here:

http://hurricaneclimate.blogspot.com/

Re 17:

IL, thanks for making me laugh. We’re now generating our own statistics-based humor on this blog.

Re #11:

Paul, what happens if you apply the same analysis to the global hurricane data? Now I’m curious. Because if the global data follows the same Poisson distribution then we’re looking at an even bigger delusion in climate science than temperatures in tree-rings.

What type of curve would arise with a Poisson constant gradually rising , for instance from alpha=5 in 1950 to alpha=7 in 2000 ? Would that curve be distinguishable from a Poisson graph with intermediate alpha, given the coarseness resulting from the fact that N = 63 only?
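One way to explore this question numerically is sketched below. It averages the Poisson pmf over 63 years whose mean rises linearly from 5 to 7 (the comment's alpha) and compares the result with a single Poisson(6). By the law of total variance, the mixture keeps mean 6 but gains extra variance equal to the spread of the yearly means, about 0.34 here. The linear ramp and the truncation at 40 hurricanes are illustrative assumptions.

```python
import math

def poisson_pmf(k, lam):
    """P(X = k) for X ~ Poisson(lam)."""
    return math.exp(-lam) * lam ** k / math.factorial(k)

def rising_rate_pmf(k, lam_start, lam_end, n_years):
    """Histogram shape (as a probability) when the Poisson mean rises linearly year by year."""
    lams = [lam_start + (lam_end - lam_start) * i / (n_years - 1) for i in range(n_years)]
    return sum(poisson_pmf(k, lam) for lam in lams) / n_years

N_YEARS, K_MAX = 63, 40
mix = [rising_rate_pmf(k, 5.0, 7.0, N_YEARS) for k in range(K_MAX + 1)]
flat = [poisson_pmf(k, 6.0) for k in range(K_MAX + 1)]

mean = sum(k * p for k, p in enumerate(mix))
var = sum((k - mean) ** 2 * p for k, p in enumerate(mix))
print(f"rising 5->7: mean {mean:.3f}, variance {var:.3f} (a constant Poisson(6) has both = 6)")
gap = N_YEARS * max(abs(a - b) for a, b in zip(mix, flat))
print(f"largest difference in expected years per histogram bin: {gap:.2f}")
```

To judge whether 63 years could tell the two shapes apart, compare the printed per-bin difference with the √N scatter of a bin holding 5 to 10 years, which is roughly 2 to 3 years.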

#20 John A – glad I have some positive effect

#18 TAC, No, I don’t think so (although I am always aware of my own fallibilities and am willing to be educated).

I think I understand the point you are making but I am not sure it is correct here in the way you imply. There are a lot of examples given in http://en.wikibooks.org/wiki/Statistics:Distributions/Poisson

One example is going for a walk and finding pennies in the street. Suppose I go for the same walk each day. Many days I find 0 pennies, a few days I find 1, a few days I find 2, etc.

I can average the number of pennies per day and come up with a mean value that tells me something about the population of pennies ‘out there’, and it will follow a Poisson distribution. If I walk for many more days I can be more and more confident of the mean value (assuming the rate of my neighbours losing pennies is constant), but I then cannot say anything about whether there is any trend with time, e.g., are my neighbours being more careless with their small change as time goes by? In order to test whether there is some trend with time, I need to look at each individual observation and treat that as the mean value, which is what Paul did in the original graph. Yes, if I assume that there is some constant rate of my neighbours losing pennies, some of which I find, I can look at the total counts and then get a standard deviation. But I would then not have 63 data points all with the same ‘error bar’; I would have one data point with the ‘error bar’ in the time axis spanning 63 years.

Yes, you can look at (for the sake of argument) pre 1945 hurricane numbers and post 1945 hurricanes and get a mean and standard deviation from Poisson statistics and infer whether there has been any change between those two periods with some sort of confidence limit but then you only have 2 data points.
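Comparing two periods can be sketched with the exact conditional test for two Poisson rates. This is a named, standard technique, not necessarily what was intended here. If the rates are equal, the first period's share of the combined count is binomial with probability proportional to its length. Pre-1945 counts are not listed in this thread, so the example splits the record keyed into R later in the thread into two roughly equal halves. The split point is an arbitrary illustrative choice.

```python
import math

# Annual counts 1944-2006 as keyed into R by a commenter further down (may contain keying errors)
COUNTS = [7, 5, 3, 5, 6, 7, 11, 8, 6, 6, 8, 9, 4, 3, 7, 7, 4, 8, 3, 7, 6,
          4, 7, 6, 4, 12, 5, 6, 3, 4, 4, 6, 6, 5, 5, 5, 9, 7, 2, 3, 5, 7,
          4, 3, 5, 7, 8, 4, 4, 4, 3, 11, 9, 3, 10, 8, 8, 9, 4, 7, 9, 15, 5]

def poisson_rate_test(n1, t1, n2, t2):
    """Two-sided exact conditional test of equal Poisson rates.

    Given n1 + n2 events, n1 is Binomial(n1 + n2, t1 / (t1 + t2)) if the rates are equal;
    the p-value sums the probabilities of all outcomes no more likely than the observed one.
    """
    n, p = n1 + n2, t1 / (t1 + t2)
    probs = [math.comb(n, k) * p ** k * (1 - p) ** (n - k) for k in range(n + 1)]
    cutoff = probs[n1] * (1 + 1e-9)   # small tolerance for floating-point ties
    return min(1.0, sum(q for q in probs if q <= cutoff))

first, second = COUNTS[:31], COUNTS[31:]   # 1944-1974 versus 1975-2006
p = poisson_rate_test(sum(first), len(first), sum(second), len(second))
print(f"{sum(first)} hurricanes in {len(first)} yr vs {sum(second)} in {len(second)} yr: p = {p:.2f}")
```

For much longer records, `math.comb(n, k)` can exceed the float range, and the probabilities would then need to be computed in log space.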

I would like to announce my official “John A Atlantic Hurricane Prediction” for 2007.

After extensive modelling of all of the variables inside of a computer model costing millions of dollars (courtesy of the US taxpayer) and staffed by a team of PhD scientists and computer programmers, I can announce:

For 2007, the number of hurricanes forming in the Atlantic will be 6 plus or minus 3

Hey everybody.

Speaking of “error bars”, what do you actually mean by that? Is it a definite range of values that is acceptable as long as they stay within some given boundaries, or is it a definite “borderline” beyond which the veracity, or whatever you might call it, of your calculations is cancelled?

In Danish I think we call it “margin of error”, but I am not sure you mean the same by “error bars”, so will somebody please inform me?

It makes it easier for me as layman to follow your discussion on this thread.

Thank you.

HK

#22 Il, I think I understand the purpose that the “bars” in the first graphic were intended to serve. My concern had to do with whether use of bars for this purpose deviates from convention.

I spent some time looking on the web, expecting to find a clear statement on error bars from either Tukey or Tufte. Unfortunately, such a statement does not seem to exist.

I did find one statement which can, I think, be interpreted to support your position:

However, this appears in a discussion of plotting large samples, and it seems likely that the word “population” was intended to refer to the sample, not the fitted distribution based on a sample of size N=1.

Where does that leave things? Well, I continue to believe that we should reserve error bars for the purpose of displaying uncertainty in data. For the second purpose, to show how well a dataset conforms to a specific population, there are lots of good graphical methods (I usually use side-by-side Boxplots, admittedly non-standard but easily interpreted; I’ve also seen lots of K-S plots, overlain histograms, etc.).

However, returning to error bars for the moment, perhaps the important point is already stated in the quote above: “Because of this lack of standard practice it is critical for the text or figure caption to report the meaning of the error bar.”

#11, thanks for the reference, I’ll try to find it. Like I said, I'm not very familiar with counting processes. However, let’s still write some thoughts down:

Yes, the mean of observations from Poisson process is a MVB estimator of intensity (lambda, tau=1), and variance of this estimator is lambda/n, where n is the sample size. And I guess that the mean is best estimate for the process variance as well. But I think you assume that each year we have different process, which confuses me.

How about thinking of the whole set (n=60 or something) as realizations of one Poisson process, and testing whether it is a good model (i.e. Poisson process, constant lambda, estimate of lambda is 6.1)? Plot this constant mean, add 95% and 99% bars using the Poisson distribution, and plot the data in the same figure.
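The bands for this plot come from Poisson quantiles. Below is a minimal sketch of that computation in plain Python. It takes the post's λ = 6.1 as the assumed constant mean, and the function names are illustrative.

```python
import math

def poisson_pmf(k, lam):
    """P(X = k) for X ~ Poisson(lam)."""
    return math.exp(-lam) * lam ** k / math.factorial(k)

def poisson_quantile(prob, lam):
    """Smallest k with P(X <= k) >= prob for X ~ Poisson(lam)."""
    if not 0.0 <= prob < 1.0:
        raise ValueError("prob must be in [0, 1)")
    k, cdf = 0, poisson_pmf(0, lam)
    while cdf < prob:
        k += 1
        cdf += poisson_pmf(k, lam)
    return k

LAM = 6.1   # the post's mean rate, assumed constant under the null
for prob in (0.01, 0.05, 0.95, 0.99):
    print(f"{prob:.2f} quantile of Poisson({LAM}): {poisson_quantile(prob, LAM)}")
```

For λ = 6, the same function returns 1, 2, 10 and 12 at the 1%, 5%, 95% and 99% levels. These match the quantiles UC quotes below.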

If Figures 1 and 2 were presented the other way around, the meaning of the “error bars” in Figure 1 could be presented more logically. From Figure 2 one can deduce that the data are from a Poisson distribution. Each annual count then is an estimate of the Poisson mean, with the one sigma confidence values on that mean as shown. The time series then can be examined to see if there is evidence of a change in the mean of the distribution.

There are three types of error here:

- Sampling error: Early samples were taken from land and shipping lanes, leaving large areas unsampled or undersampled. The size of this error has gone down with time.
- Methodological error: This includes everything from indirect methods of estimating location, wind speed and pressure to the accuracy and precision of instruments. It also has gone down with time but is still non-zero.
- Process error: We assume that even if the “climate” doesn’t change from year to year, the number of storms will.

Any meaningful “error bars” would have to include (estimates of) the above.

I still don’t understand why the nice fit in the second plot implies the absence of a trend. Suppose that there was a very clear trend, with 1 hurricane in 1945, 2 hurricanes in 1946, … , and finally 12 hurricanes in 2005 and 15 hurricanes in 2006. Figure 2 would remain completely unaltered. So the fact that the Poisson distribution fits the histogram seems irrelevant as far as the existence of a trend is concerned. It is possible to obtain a Poisson distribution with one global rate just by adding smaller distributions with different rates.

#26 UC: I completely agree with what you’ve written.

#29,

True, histogram doesn’t care about the order.

If google didn’t lie, 0.01 0.05 0.95 0.99 quantiles for Poisson(6) are 1 2 10 12, respectively. So, to me, the only problem with Poisson(6) model in this case are the 10 consecutive less-than-averages in 70’s.
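The ten consecutive below-average years in the 1970s can be checked against the iid Poisson model with a Monte Carlo sketch. It first counts the longest run of below-mean years in the counts keyed into R later in the thread. It then estimates how often 63 simulated Poisson years contain a run at least that long. The sampler (Knuth's method), the seed and the simulation count are illustrative choices.

```python
import math
import random

# Annual counts 1944-2006 as keyed into R by a commenter further down (may contain keying errors)
COUNTS = [7, 5, 3, 5, 6, 7, 11, 8, 6, 6, 8, 9, 4, 3, 7, 7, 4, 8, 3, 7, 6,
          4, 7, 6, 4, 12, 5, 6, 3, 4, 4, 6, 6, 5, 5, 5, 9, 7, 2, 3, 5, 7,
          4, 3, 5, 7, 8, 4, 4, 4, 3, 11, 9, 3, 10, 8, 8, 9, 4, 7, 9, 15, 5]

def longest_run_below(xs, threshold):
    """Length of the longest run of consecutive values strictly below threshold."""
    best = run = 0
    for x in xs:
        run = run + 1 if x < threshold else 0
        best = max(best, run)
    return best

def poisson_draw(lam, rng):
    """Knuth's multiplication method; adequate for small lam such as 6."""
    limit, k, p = math.exp(-lam), 0, 1.0
    while True:
        p *= rng.random()
        if p <= limit:
            return k
        k += 1

def run_probability(n_years, lam, run_len, n_sim=2000, seed=2):
    """Monte Carlo chance that n_years of iid Poisson(lam) counts contain at least
    run_len consecutive years below lam."""
    rng = random.Random(seed)
    hits = sum(
        longest_run_below([poisson_draw(lam, rng) for _ in range(n_years)], lam) >= run_len
        for _ in range(n_sim))
    return hits / n_sim

lam = sum(COUNTS) / len(COUNTS)
observed = longest_run_below(COUNTS, lam)
print(f"longest below-mean run: {observed} years")
print(f"chance of a run that long under iid Poisson: {run_probability(len(COUNTS), lam, observed):.3f}")
```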

I just checked: the term ‘trend’ is not in the subject index of Kendall’s ATS. What are we actually looking for? IMO we should look for possible changes in the intensity parameter.

#29 James, your point is well taken. You could have a strong trend and still obey a Poisson distribution. However, it appears that is not the case here; there is no trend in the data.

Incidentally, landfalling hurricanes were considered (here), and it seemed that the data were almost too consistent with a simple Poisson process. It made me wonder what was going on.

TAC, re #9: search on “ergodicity” at CA (or “count ’em, five”). This was the subject of argument between myself and “tarbaby” Bloom. The counts are known with high (but not 100%) accuracy, but counts are not the issue; it’s the behavior of the climate system that’s the issue, and your desire to make an inference *among* years. If the climate system were to replay itself over 1950-2006, you’d get a different suite of counts. That’s the sense in which “error” is meaningful for a yearly count. This is going to sound fanciful to anyone who has not analysed time-series data from a stochastic system. However, it is epistemologically and inferentially correct.

#32

What test have you used to establish that there is no trend?

A GAM fitted to these data, with Poisson variance, finds significant changes with time (p=0.027). This is only an approximate test, but a second order GLM, again with Poisson variance, is also significant (p=0.039).
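RichardT's exact model specifications are not given, and the GAM is not reproduced here. The sketch below fits one plausible version of the second-order model: a Poisson GLM with log link and linear plus quadratic terms in year. It is fitted by iteratively reweighted least squares in plain Python and compared with a constant rate by a likelihood-ratio test. The data are the counts keyed into R later in the thread, and the year rescaling is an illustrative choice. The printed p-value need not match the 0.039 quoted, and the test assumes Poisson variance (no overdispersion).

```python
import math

# Annual counts 1944-2006 as keyed into R by a commenter further down (may contain keying errors)
COUNTS = [7, 5, 3, 5, 6, 7, 11, 8, 6, 6, 8, 9, 4, 3, 7, 7, 4, 8, 3, 7, 6,
          4, 7, 6, 4, 12, 5, 6, 3, 4, 4, 6, 6, 5, 5, 5, 9, 7, 2, 3, 5, 7,
          4, 3, 5, 7, 8, 4, 4, 4, 3, 11, 9, 3, 10, 8, 8, 9, 4, 7, 9, 15, 5]

def solve(a, b):
    """Solve the small dense system a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [list(row) + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[piv] = m[piv], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in reversed(range(n)):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x

def linear_predictor(X, beta):
    return [sum(b * v for b, v in zip(beta, row)) for row in X]

def poisson_glm(X, y, max_iter=50, tol=1e-10):
    """Poisson regression with log link, log E[y] = X @ beta, fitted by IRLS.
    Column 0 of X is assumed to be the intercept."""
    p = len(X[0])
    beta = [math.log(sum(y) / len(y))] + [0.0] * (p - 1)   # start from a constant rate
    for _ in range(max_iter):
        eta = linear_predictor(X, beta)
        mu = [math.exp(e) for e in eta]
        z = [e + (yi - m) / m for e, yi, m in zip(eta, y, mu)]   # working response
        # Weighted normal equations (X' W X) beta = X' W z, with weights W = mu
        xtwx = [[sum(m * row[i] * row[j] for m, row in zip(mu, X)) for j in range(p)]
                for i in range(p)]
        xtwz = [sum(m * row[i] * zi for m, row, zi in zip(mu, X, z)) for i in range(p)]
        new_beta = solve(xtwx, xtwz)
        step = max(abs(a - b) for a, b in zip(new_beta, beta))
        beta = new_beta
        if step < tol:
            break
    return beta

def log_likelihood(X, beta, y):
    mu = [math.exp(e) for e in linear_predictor(X, beta)]
    return sum(yi * math.log(m) - m - math.lgamma(yi + 1) for yi, m in zip(y, mu))

t = [(yr - 1975) / 31 for yr in range(1944, 2007)]   # years rescaled to [-1, 1]
X_null = [[1.0] for _ in t]                          # constant rate
X_quad = [[1.0, ti, ti * ti] for ti in t]            # second-order trend in the log rate
ll_null = log_likelihood(X_null, poisson_glm(X_null, COUNTS), COUNTS)
ll_quad = log_likelihood(X_quad, poisson_glm(X_quad, COUNTS), COUNTS)
lr = max(0.0, 2 * (ll_quad - ll_null))
# A chi-square variable with 2 df has survival function exp(-x / 2)
print(f"LR = {lr:.2f}, p = {math.exp(-lr / 2):.3f}")
```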

#23 John A

“After extensive modelling of all of the variables inside of a computer model costing millions of dollars (courtesy of the US taxpayer) and staffed by a team of PhD scientists and computer programmers, I can announce: For 2007, the number of hurricanes forming in the Atlantic will be 6 plus or minus 3”

I’m very disappointed that, as a fellow UK taxpayer, you do not appreciate the fact that, in order to justify the significant sums of money we spend on funding this vital (to saving the planet) climate research, your supercomputer can only calculate to one significant figure. As a concerned UK taxpayer I have taken the liberty of once more firing up my retired back-of-the-fag-packet supercomputer (which was retired from AERE Harwell some years ago after it was no longer required to solve the Navier-Stokes equations), and based on the results it has output, my prediction (endorsed by the NERC due to its high degree of precision) is

6.234245638939393 (+/- n/a, as this calculation has been performed by a supercomputer that can calculate pi to at least 22514 decimal places, as memorised by Daniel Tammet).

As a UK tax payer I feel that it is important that such calculations must be highly precise and certainly not subject to any uncertainty. As a Church of England vicar I also appreciate that my mortality has already been determined (something which sadly people like Yule did not understand). I do confess however to be puzzled as to why inflation appears to have remained relatively constant and low since 1997 yet as a result of AGW it is now much rainier in the UK?

KevinUK

#33 Bender, I’m not sure I understand your point. FWIW, I have a bit of familiarity with time series. However, the question here has to do with graphical display of information, and specifically the use of error bars. At the risk of repeating myself, where the plotted points are known without error, by convention (i.e., what I was taught, but it does seem to be accepted by the overwhelming majority of practitioners) one does not employ error bars.

Of course I understand your point about ergodicity. I agree there is a perfectly appropriate question about how the observations correspond to the hypothesized stochastic process, and clearly the variance of the process plays a role. As I think we both know, there are plenty of graphical techniques for communicating this information, some of which are mentioned above. But I do not see how this has anything to do with how one plots original data.

It is ironic that this debate about proper graphics is occurring in the context of a debate about uncertainty in hurricane count data. For example, I thought Willis (here) presented an elegant way to display the uncertainty of the hurricane count data using both error bars and semicircles. That’s what error bars are for: to communicate the uncertainty in the data (which could be measured values, model results, or whatever). Climate scientists need to get used to thinking this way, and, as with other statistical activities, it is important to employ consistent and defensible methods.

In a nutshell, plotting the 2005 hurricane count as 15 +/- 3.8 suggests that there might have been 18 hurricanes in 2005. That’s simply wrong. Said differently, the probability of an 18 in 2005 is zero; the number was 15. That number will never change (unless…). Data are data, data come first, and the properties of the data, including uncertainty, do not depend on the characteristics of some subsequently hypothesized stochastic process (at least in the classical world, where I spend most of my time).

Finally, to be clear: I am raising an issue of graphical presentation. If the graphics were done differently — UC had it right in #26 — there would not be a problem. The problem with Figure 1 is that it overloads “error bars” in a way that’s bound to cause confusion.

That’s my $0.02.

#36. TAC, that makes sense to me as well.

#34 RichardT, I may have made a mistake in keying the data, but here are my results showing no significant trend:

```
% Year
[1] 1944 1945 1946 1947 1948 1949 1950 1951 1952 1953 1954 1955 1956 1957 1958 1959 1960 1961 1962 1963
[21] 1964 1965 1966 1967 1968 1969 1970 1971 1972 1973 1974 1975 1976 1977 1978 1979 1980 1981 1982 1983
[41] 1984 1985 1986 1987 1988 1989 1990 1991 1992 1993 1994 1995 1996 1997 1998 1999 2000 2001 2002 2003
[61] 2004 2005 2006
% Hc
[1] 7 5 3 5 6 7 11 8 6 6 8 9 4 3 7 7 4 8 3 7 6 4 7 6 4 12 5 6 3 4 4 6 6
[34] 5 5 5 9 7 2 3 5 7 4 3 5 7 8 4 4 4 3 11 9 3 10 8 8 9 4 7 9 15 5
% cor.test(Year,Hc,method="pearson")

Pearson's product-moment correlation

data: Year and Hc
t = 0.9513, df = 61, p-value = 0.3452
alternative hypothesis: true correlation is not equal to 0
95 percent confidence interval:
-0.1307706 0.3579536
sample estimates:
cor
0.1209120

% cor.test(Year,Hc,method="kendall")

Kendall's rank correlation tau

data: Year and Hc
z = 0.3654, p-value = 0.7148
alternative hypothesis: true tau is not equal to 0
sample estimates:
tau
0.03313820

% cor.test(Year,Hc,method="spearman")

Spearman's rank correlation rho

data: Year and Hc
S = 38969.32, p-value = 0.6145
alternative hypothesis: true rho is not equal to 0
sample estimates:
rho
0.06467643

Warning message:
Cannot compute exact p-values with ties in: cor.test.default(Year, Hc, method = "spearman")
%
```

#36 & 37

What are the errors for the time series of hurricane counts? Suppose you could re-run 1945-2006 over and over like in Groundhog Day. The number of hurricanes observed each year would change from repetition to repetition. You could then take averages and get a good measurement of the mean and variance of the number of hurricanes in each year. But you can’t. So the best you can do is assume each year’s count is drawn from a Poisson process with mean and variance equal to the number of observed hurricanes.

The same applies to the histogram. The error on the height of each bin is given by Poisson statistics too.

If you fit a function to a series of counts these are the errors that should be used in the fit.

This has been standard practice in nuclear physics and its descendants for close to 100 years. As an example, here’s a link from a nuclear engineering department. Notice the statement that “Counts should always be reported as A +- a.”

Re 36:

… where the plotted points are known without error, by convention (i.e., what I was taught, but it does seem to be accepted by the overwhelming majority of practitioners) one does not employ error bars.

The plotted points are not known without error.

Observing Methods

As has been discussed here before.

#39 Paul, thanks for the link to the interesting document. You have to read it pretty carefully, and it deals with a slightly different problem (estimating the true mean, a parameter) but it does shed a bit of light on the topic. One particular thing to note: It specifies estimating sigma as the square root of the true mean, m, not the count, n. Thus, following the document’s prescription, all of the error bars in figure 1 should be exactly the same length, as implied by UC (#26) and also in #14. However, if you try to apply this to the hurricane dataset — for a small value of lambda where some of the observed counts are less than the standard deviation — you’ll find it doesn’t work very well (some of your observations will have error bars that go negative, for example).

Does this clear anything up or just create more confusion?

Let me try a different approach: In 2005, we agree there were n=15 hurricanes. We also agree that the expected count (assuming iid Poisson) was approximately m=lambda=6.1, and therefore the standard deviation of the expected count is approximately 2.5. So here’s the question: Is the n=15 a datapoint or an estimator for lambda? I expect your answer will be “both” — but I’m not sure.

Anyway, I’d be interested in your response.

#40 James, I concede the point. However, in the interest of resolving the issue about error bars, can we, just for the moment, pretend that things are measured without error? Thanks! 😉

#41. A question: hurricanes are defined here as counts with a wind speed GT 65 knots. There’s nothing physical about 65 knots. Counts based on other cutoffs have different distributions; for example, cat4 hurricanes don’t have a Poisson distribution, but more like a negative exponential or some other tail distribution. Hurricanes are a subset of cyclones, which in turn are a subset of something else. If hurricanes have a Poisson distribution, can cyclones also have a Poisson distribution? I would have thought that if cyclones had a Poisson distribution, then hurricanes would have a tail distribution. Or, if hurricanes have a Poisson distribution, then what distribution would cyclones have? Just wondering; there’s probably a very simple answer.

re: #43

I think it’d be similar to a pass-fail class. You might set an arbitrary passing mark for each individual test during the semester and then give a number of tests, some harder and some easier, at random. You’d have a Poisson distribution, possibly. But you might also, on adding up the various test results for each individual in the class, decide you want 85% of students to pass, and set the overall passing mark at whatever value gives you that ratio. This could be regarded as a physical result, while the arbitrary value for passing an individual test would not be.

As for cyclones, they’d be everything which passes the “cyclone” test. There’d be a finite number of such cyclones each season, so they should be distributed just like hurricanes are, just with larger numbers. I think someone here was saying that as the numbers get larger, the curve gets more like a normal distribution, which makes sense, so I’d expect the distribution of cyclones to be more normal than the distribution of hurricanes. This being the case, it would seem that the distribution of a tail is simply a Poisson distribution, with the pool of “passing” candidates starting where the tail was chopped off.
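A standard result bears directly on the #41 question: the thinning property of the Poisson process. Suppose the number of cyclones in a season is Poisson with mean λ, and each cyclone independently reaches hurricane strength with probability p. Then the hurricane count is Poisson with mean pλ, so both counts can be Poisson at once. The sketch below checks this by simulation. The values λ = 10 and p = 0.6 are hypothetical, and the independence assumption is exactly what would have to hold for the real data.

```python
import math
import random

def poisson_sample(lam, rng):
    """Draw from Poisson(lam) by Knuth's multiplication method (fine for small lam)."""
    limit = math.exp(-lam)
    k, prod = 0, rng.random()
    while prod > limit:
        k += 1
        prod *= rng.random()
    return k

def thinned_counts(lam, p, n_years, rng):
    """Simulate yearly cyclone counts and keep each cyclone independently with probability p."""
    counts = []
    for _ in range(n_years):
        n_cyclones = poisson_sample(lam, rng)
        counts.append(sum(rng.random() < p for _ in range(n_cyclones)))
    return counts

rng = random.Random(2007)
hurricanes = thinned_counts(lam=10.0, p=0.6, n_years=20_000, rng=rng)
mean = sum(hurricanes) / len(hurricanes)
var = sum((h - mean) ** 2 for h in hurricanes) / (len(hurricanes) - 1)
print(f"mean = {mean:.3f}, variance = {var:.3f}  (Poisson(6) would give 6 and 6)")
```

Mean and variance come out close to each other, as a Poisson count requires. Under this model, a threshold-defined subset of a Poisson count stays Poisson. A non-Poisson tail (as claimed for cat4) would therefore point to the independence assumption failing rather than to thresholding as such.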

Re #42

Wait a sec, TAC. Don’t concede too much here. Measurement error and sampling error are different things. This issue is all about sampling error. [Linsay’s #39 clarifies my ergodicity argument.]

The hypothesis we want to test is whether the observed counts are likely to be drawn from a random Poisson process (or truncated Poisson, whatever) with fixed mean = fixed variance. Each year is a random sample from a stochastic process. Alternative hypothesis: there is a trend in the mean. Paul Linsay has plotted the variance around each year’s observation as though that observation *were* that year’s mean. That’s wrong, and that’s why your complaint about the lower bar (count minus error) dropping below zero for low counts is valid.

The reason it’s wrong is that the null hypothesis is that there is only one fixed mean. If any observations fall outside the 95% interval around that mean, as 2005 does, there’s a chance we’re wrong. If, further, the proportion of observations falling outside the 95% confidence interval increases with time, then there’s a trend and the mean is not fixed.

All this assumes that the variance is constant with time. But there is no reason this must be true. If the process is nonstationary, it is not ergodic. Then inferences about trends start to get dicey. That’s where the statistical approach breaks down and the physical models start to play a role.

re: 44 cyclones … and by extension, tornadoes exhibit a Poisson distribution? In an AGW theory of everything, shouldn’t F3-F5 tornadoes be increasing in frequency? Or is it because they do not tap directly into the catastrophic global increase of SSTs?

SINUSOIDAL POISSON DISTRIBUTION

Paul, a very interesting post. I disagree, however, when you say:

When I looked at the distribution, it reminded me of a kind of distribution I have seen before in sea surface temperatures, which has two peaks instead of one. I’ve been investigating the properties of this kind of distribution, which seems to be a combination of a classical distribution (e.g. Poisson, Gaussian) with what (because I don’t know the name for it) I call a “sinusoidal distribution.” (This is one of the joys of not knowing a whole lot about a subject, that I can discover things. They’ve probably been discovered before … but I can come at them without preconception, and get the joy of discovery.)

A sinusoidal distribution arises when there is a sinusoidal component in a stochastic process. The underlying sinusoidal distribution is the distribution of the y-values (equal to sin(x)) over a cycle. The distribution is given by d(arcsin(y))/dy, suitably normalized. This is proportional to 1/sqrt(1-y^2) (the normalized density is 1/(pi*sqrt(1-y^2))), where y varies from -1 to 1. The distribution looks like this:
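The U shape can be checked numerically. Integrating the normalized density 1/(pi*sqrt(1-y^2)) gives P(|y| < t) = (2/pi)*arcsin(t). The sketch below compares that formula with the fraction of an evenly sampled sine cycle that falls near the extremes (|y| > 0.9) and near the middle (|y| < 0.1). The grid size and thresholds are arbitrary choices for illustration.

```python
import math

def fraction_of_cycle(predicate, steps=100_000):
    """Fraction of one sine cycle (even grid in x) where predicate(sin(x)) holds."""
    return sum(predicate(math.sin(2 * math.pi * i / steps)) for i in range(steps)) / steps

def arcsine_prob_abs_below(t):
    """P(|Y| < t) for Y = sin(X), X uniform: integral of 1/(pi*sqrt(1-y^2)) over (-t, t)."""
    return 2 * math.asin(t) / math.pi

near_extremes = fraction_of_cycle(lambda y: abs(y) > 0.9)
near_middle = fraction_of_cycle(lambda y: abs(y) < 0.1)
print(f"|y| > 0.9: grid {near_extremes:.4f}, formula {1 - arcsine_prob_abs_below(0.9):.4f}")
print(f"|y| < 0.1: grid {near_middle:.4f}, formula {arcsine_prob_abs_below(0.1):.4f}")
```

The two bands have the same width (0.2), yet the sine spends roughly four and a half times as long in the outer band as in the middle one.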

As you can see, the sine wave spends most of its time at the extremes, and very little time in the middle of the distribution. Note the “U” shape of the distribution. I looked at combinations of the sinusoidal with the Poisson distribution. Here is a typical example:

Next, here is the distribution of the detrended cyclone count, 1851-2006.

Note the “U-shape” of the peak of the histogram. Also, note that the theoretical Poisson curve is to the left of the sides of the actual data. This is another sign that we are dealing with a sinusoidal Poisson distribution, and is visible in Figure 2 at the head of this thread.
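One way to see why a cycle widens the histogram is to let the Poisson mean follow base + A*sin(2*pi*t/P). Averaged over whole cycles, the counts then have mean = base but variance = base + A^2/2, so they are overdispersed relative to a plain Poisson with the same mean. The sketch below uses hypothetical values: base = 6.1, A = 2 (4 peak to peak) and P = 60 years.

```python
import math
import random

def poisson_sample(lam, rng):
    """Draw from Poisson(lam) by Knuth's multiplication method (fine for small lam)."""
    limit = math.exp(-lam)
    k, prod = 0, rng.random()
    while prod > limit:
        k += 1
        prod *= rng.random()
    return k

def modulated_counts(base, amplitude, period, n_years, rng):
    """Yearly counts whose Poisson mean follows base + amplitude*sin(2*pi*t/period)."""
    return [poisson_sample(base + amplitude * math.sin(2 * math.pi * t / period), rng)
            for t in range(n_years)]

rng = random.Random(1)
counts = modulated_counts(base=6.1, amplitude=2.0, period=60, n_years=60 * 200, rng=rng)
mean = sum(counts) / len(counts)
var = sum((c - mean) ** 2 for c in counts) / (len(counts) - 1)
# Over whole cycles the theory gives mean = base and variance = base + amplitude**2 / 2
print(f"mean = {mean:.2f}, variance = {var:.2f} (theory 6.10 and 8.10)")
```

A variance-to-mean ratio above 1 is one symptom of the pattern described above, where the Poisson curve sits inside the sides of the data. On its own, it does not show that the extra variance comes from a cycle rather than from some other source of changing lambda.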

One of the curiosities of the sinusoidal distribution is that the width (between the peaks) is approximately equal to the peak-to-peak amplitude of the sine wave. From the figure above, we can see that we are looking for an underlying sinusoidal component with an amplitude of ~4 cyclones peak to peak. A periodicity analysis of the detrended cyclone data indicates a strong peak at about 60 years. A fit for a sinusoidal wave shows the following:

Clearly, before doing any kind of statistical analysis of the cyclone data, it is first necessary to remove the major sinusoidal signal. Once the main sinusoidal signal is removed, the reduced dataset looks like this:

As you can see, the fit to a Poisson distribution is much better once we remove the underlying sinusoidal signal.
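The post does not say how the sine fit was done. Once a period has been chosen (here the ~60 years from the periodicity analysis), one simple approach is ordinary least squares on a constant plus sin and cos terms, which is linear in the unknowns. The sketch below takes that approach and checks it on noise-free synthetic data. The period, amplitude, phase and the 156-year span are illustrative assumptions, not the actual cyclone series.

```python
import math

def fit_fixed_period_sinusoid(t, y, period):
    """Least-squares fit of y ~ c + a*sin(w*t) + b*cos(w*t) for a known period.

    Returns (c, amplitude, phase) so that y ~ c + amplitude*sin(w*t + phase).
    """
    w = 2 * math.pi / period
    rows = [(1.0, math.sin(w * ti), math.cos(w * ti)) for ti in t]
    # Augmented normal equations [X^T X | X^T y]
    m = [[sum(r[i] * r[j] for r in rows) for j in range(3)]
         + [sum(r[i] * yi for r, yi in zip(rows, y))] for i in range(3)]
    # Gaussian elimination with partial pivoting
    for col in range(3):
        piv = max(range(col, 3), key=lambda row: abs(m[row][col]))
        m[col], m[piv] = m[piv], m[col]
        for r in range(col + 1, 3):
            f = m[r][col] / m[col][col]
            for k in range(col, 4):
                m[r][k] -= f * m[col][k]
    # Back substitution
    beta = [0.0, 0.0, 0.0]
    for i in range(2, -1, -1):
        beta[i] = (m[i][3] - sum(m[i][k] * beta[k] for k in range(i + 1, 3))) / m[i][i]
    c, a, b = beta
    return c, math.hypot(a, b), math.atan2(b, a)

def remove_sinusoid(t, y, amplitude, phase, period):
    """Subtract the fitted cycle (keeping the mean level) from the series."""
    w = 2 * math.pi / period
    return [yi - amplitude * math.sin(w * ti + phase) for ti, yi in zip(t, y)]

t = list(range(156))  # e.g. 1851-2006 as year offsets
synthetic = [6.0 + 2.0 * math.sin(2 * math.pi * ti / 60 + 0.5) for ti in t]
c, amp, phase = fit_fixed_period_sinusoid(t, synthetic, period=60)
residual = remove_sinusoid(t, synthetic, amp, phase, period=60)
print(f"level {c:.3f}, amplitude {amp:.3f} (peak to peak {2 * amp:.3f}), phase {phase:.3f}")
```

On real counts, the residual series is what would then be histogrammed against a Poisson curve. Fixing the period in advance keeps the fit linear; letting the period float as well would need a nonlinear search.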

CONCLUSION

As Jean S. pointed out somewhere, before we set about any statistical analysis, it is crucial to first determine the underlying distribution. In this case (and perhaps in many others in climate science), the existence of an underlying sinusoidal cycle can distort the underlying distribution in a significant way. While it might be possible to determine the statistical features (mean, standard deviation, etc.) for a particular combined distribution, it seems simpler to me to remove the sinusoidal component before doing the analysis.

My best to everyone, and special thanks to Steve M. for providing this statistical wonderland wherein we can discuss these matters.

w.

TAC plus bender #45. I see we are arguing about 2 different things here, the first perhaps a little more to do with semantics, the second more substantial.

In #36 TAC argued that if the count for 2005 was 15 then (assuming perfect recording capability) that was an exact number and so should have no error bar. He (? sorry, I shouldn’t make assumptions) also says that if you put 15+/-3.6, that implies the count could have been 18, which is wrong.

I disagree. I think those are confidence limits – estimators if you prefer. In a physics experiment, if I measure something numerous times with a measurement error, the error bar tells me the probability that, if I measure it again, I will get a value within a certain range of that value. In a literal sense we can’t ‘run’ 2005 again, but the graph as plotted by Paul Linsay is meaningful to me as the confidence limits for each count: if we were to have another year with the same physical conditions as 2005, what is the likelihood that we would get 15 storms again? To me the correct answer is 15+/-3.6 (1 sigma). It’s like the example from the physics web page linked by Paul: suppose I measure the number of radioactive decays per minute and record the decays per minute for an hour. After the hour I have 60 measurements, each of an exact number, but that does not say to me that there are no error bars on those numbers. If I have N counts in the first minute then the standard deviation (the expectation of what I might get in the second minute) is root N. If I was to plot all of those 60 measurements against time I would plot each count value with its own individual confidence limit of root N (N being the particular count in that particular minute).

Yes, if I take all the counts for the whole 60 minutes I can get a mean value for the hour. The confidence limit on that mean value will be root of all the counts in the hour (approximately 60N and the standard deviation will be root 60N). But that is not the confidence limit on an individual measurement. I could plot that mean value with its confidence limit root 60N but I would then only have a single point on the graph.

Paul says he comes from a physics background, so do I and maybe this is where we are differing with TAC, Bender etc (maybe, again don’t want to make assumptions). In #45 Bender says that it is wrong to plot each point with the variance given by the mean of that point. Sorry Bender, I disagree with you, I believe it is correct. I am looking at it from the perspective of the radioactive decay counting experiment described above. You can reduce the uncertainty by summing together different years (although your uncertainty only decreases by root of the number of years that you sum) but then you have reduced the number of data points that you have! If you have the 63 years’ worth of individual year measurements then each individual one has a standard deviation which should be plotted, they are not all the same. If you want to address hypotheses such as ‘are the counts changing with time’ then you have to address the uncertainty in each data point if you retain all 63 data points and look for a trend that is significant above that noise level. Yes, you can reduce the uncertainty by summing years but then you have a lot less data points on your graph.

No, the variance count does NOT drop below zero because this is Poisson statistics, it is asymmetrical and doesn’t go below zero.

Let’s see if I clarify or confuse:

Yes, we are testing H0: the data are samples from a Poisson(lambda) process. We don’t know lambda. We need to estimate it using the data. We want to find a function of the observations (a statistic) that tells us something about this unknown lambda. Now, assuming H0 is true, we have quite a lot of theory behind us telling us that the average of the observations (say $\hat{x}$) is the best possible estimate of lambda (it attains the minimum variance bound, MVB, for example). We also know that the variance of this estimator is $\hat{x}/n$, where n is the number of independent observations. You cannot find an unbiased estimator that has a smaller variance than this. An estimate obtained this way will be distributed more closely around the true lambda than any other unbiased estimator. And the number of observations in this case is 60 (?), so we can see that the sampling variance is small (I think I could say ‘sampling error is very small’ in this context as well). You can try this with simulated Poisson processes: take 60 samples of Poisson(6), take the average, and see how often it is less than 5 or more than 7. If you take only one sample, the sampling error is much larger. This is actually shown in Figure 1 (IMO).

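The simulation suggested above is easy to run. The sketch below draws 60 values from Poisson(6) many times and records how often the average falls outside [5, 7]. For comparison, it also records how often a single draw does. The seed and the number of trials are arbitrary.

```python
import math
import random

def poisson_sample(lam, rng):
    """Draw from Poisson(lam) by Knuth's multiplication method (fine for small lam)."""
    limit = math.exp(-lam)
    k, prod = 0, rng.random()
    while prod > limit:
        k += 1
        prod *= rng.random()
    return k

rng = random.Random(60)
lam, n_obs, trials = 6.0, 60, 5_000
outside_mean = 0    # averages of 60 draws falling below 5 or above 7
outside_single = 0  # single draws falling below 5 or above 7
for _ in range(trials):
    draws = [poisson_sample(lam, rng) for _ in range(n_obs)]
    avg = sum(draws) / n_obs
    outside_mean += (avg < 5 or avg > 7)
    outside_single += (draws[0] < 5 or draws[0] > 7)
print(f"sd of the average (theory): {math.sqrt(lam / n_obs):.3f}")
print(f"fraction of averages outside [5, 7]: {outside_mean / trials:.4f}")
print(f"fraction of single draws outside [5, 7]: {outside_single / trials:.4f}")
```

With sd(average) = sqrt(6/60) ≈ 0.32, averages outside [5, 7] are rare. A single draw lands outside that range roughly half the time.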

A completely different matter is the testing of H0. Knowing that lambda is close to 6, we can compute the [0.01 0.05 0.95 0.99] quantiles of Poisson(6); if, for example, the 0.99 point is exceeded 10 times in the data, we can suspect that our distribution model is not OK. In addition, there should be no serial correlation in the data if H0 is true.
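A minimal sketch of both checks, assuming lambda = 6 and 60 years. It computes the quantiles as the smallest k with P(N <= k) >= q, and the expected number of years strictly above the 0.99 point. It also provides a lag-1 autocorrelation function for the serial-correlation check. Because Poisson(6) is discrete, P(N > q99) comes out somewhat below 0.01.

```python
import math

def poisson_quantile(q, lam):
    """Smallest k with P(N <= k) >= q for N ~ Poisson(lam)."""
    k, pmf = 0, math.exp(-lam)
    cdf = pmf
    while cdf < q:
        k += 1
        pmf *= lam / k
        cdf += pmf
    return k

def lag1_autocorrelation(x):
    """Sample lag-1 autocorrelation; near zero for serially independent data."""
    m = sum(x) / len(x)
    num = sum((a - m) * (b - m) for a, b in zip(x[:-1], x[1:]))
    den = sum((a - m) ** 2 for a in x)
    return num / den

lam, n_years = 6.0, 60
quantiles = [poisson_quantile(q, lam) for q in (0.01, 0.05, 0.95, 0.99)]
q99 = quantiles[-1]
p_exceed = 1.0 - sum(math.exp(-lam) * lam**k / math.factorial(k) for k in range(q99 + 1))
print("quantiles [0.01 0.05 0.95 0.99]:", quantiles)
print(f"P(N > {q99}) = {p_exceed:.4f}; expected exceedances in {n_years} years = {n_years * p_exceed:.2f}")
```

Under H0, only about half an exceedance of the 0.99 point is expected in 60 years. Ten would therefore be a strong signal against the model. `lag1_autocorrelation` would be applied to the actual yearly counts.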

And, I didn’t mention measurement errors in the above. Measurement errors affect both estimate of lambda and testing of H0.

And finally, if the bars in Figure 1 represent sampling error, there is basically nothing wrong with it. The sampling variance is $\hat{x}/n$ with n=1. But I think that approach doesn’t give a very powerful test of H0.

Re #47

If sinusoidal, why not “multistable”?

You see the problem? Detection & attribution. (What is the frequency of your sinusoid? Is there more than one frequency? Or maybe the process is “multistable”? What are the attracting states?) Problem specification is a mess. Stationary random Poisson is just a starting point.

The biggest reason arguing against Linsay’s Poisson is the high bias in the 1-2 counts and the low bias in the 3-4 counts. Systematic bias usually implies error in model specification. Eschenbach’s cycle-removed Poisson, interestingly, removes this systematic bias.

Re #48

Unfortunately, I am correct (in terms of the inferences that are being attempted with these data, which assume that you are observing a single stochastic process). If you suppose that the mean fluctuates from year to year (hence plotting variance around each observation, not the series mean), then you cannot suppose that the variance is fixed. Either you are observing one stochastic process (one mean, one variance), or you are observing more than one (multiple means, multiple variances) stitched together to appear as one.

Why would you ever plot a variance around an observation (as opposed to a mean)? What would that tell you?

Re #49

You clarify, at least up until the very end:

What’s right about plotting error around observations? Error is to be plotted on means; individual observations fall inside or outside those error limits.

Re #48

It’s not 15 +/- 3.6 that should be plotted, it’s 6.1 +/- 3.6. If the series is stationary then the obs. 15 should be compared to that. In which case 15 is extreme.

Eschenbach shows that the mean and variance might not be stationary but sinusoidal. Now the obs 15 must be compared to the potential range calculated for 2005 to determine if it’s extreme. In which case 15 is still extreme.

Re variance around an observation: the mean of one sample is the sample itself. Observe only one sample from a Poisson with unknown lambda, and the best estimate of lambda is the observation itself. The sampling variance is the observation itself.

#38

The Pearson correlation coefficient you are using to find trends assumes that the data have a Gaussian distribution. Given the discussion of the Poisson nature of the data on this page, this choice needs justifying. A more appropriate test for a linear trend when the data have Poisson variance is to use a generalised linear model with a Poisson error distribution. I’ve done this, and you are correct, there is no linear trend.
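The comment does not say which software was used. R's `glm(..., family = poisson)` is the usual tool for this. For a self-contained illustration, here is a plain-Python sketch that fits log E[count] = b0 + b1*year by Newton-Raphson on the Poisson log-likelihood (log link, my assumption). It returns the slope together with a Wald standard error. A rough 95% interval of ±1.96 standard errors also shows how large a trend could hide in the noise. The two series in the usage are synthetic checks, not hurricane data.

```python
import math

def poisson_glm_trend(t, y, iters=30):
    """Fit log E[y_t] = b0 + b1*t by Newton-Raphson (equivalently IRLS, log link).

    Returns (b0, b1, se_b1); se_b1 comes from the inverse Fisher information.
    Time is centred internally for numerical stability.
    """
    tbar = sum(t) / len(t)
    tc = [ti - tbar for ti in t]
    a, b1 = math.log(sum(y) / len(y)), 0.0  # intercept on the centred scale, slope

    def information(a, b1):
        mu = [math.exp(a + b1 * x) for x in tc]
        return (mu, sum(mu), sum(m * x for m, x in zip(mu, tc)),
                sum(m * x * x for m, x in zip(mu, tc)))

    for _ in range(iters):
        mu, i00, i01, i11 = information(a, b1)
        g0 = sum(yi - m for yi, m in zip(y, mu))               # score for intercept
        g1 = sum((yi - m) * x for yi, m, x in zip(y, mu, tc))  # score for slope
        det = i00 * i11 - i01 * i01
        a += (i11 * g0 - i01 * g1) / det
        b1 += (i00 * g1 - i01 * g0) / det
    _, i00, i01, i11 = information(a, b1)
    se_b1 = math.sqrt(i00 / (i00 * i11 - i01 * i01))
    return a - b1 * tbar, b1, se_b1

years = list(range(1945, 2007))
flat = [6] * len(years)                                           # no trend at all
growing = [math.exp(1.5 + 0.01 * (yr - 1945)) for yr in years]  # exact 1%/yr growth
for label, y in (("flat", flat), ("growing", growing)):
    b0, b1, se = poisson_glm_trend(years, y)
    print(f"{label}: slope = {b1:.4f} per year, 95% CI = [{b1 - 1.96 * se:.4f}, {b1 + 1.96 * se:.4f}]")
```

With real counts in place of `flat` or `growing`, a slope interval that straddles zero corresponds to "no linear trend" on the log scale.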

The absence of a linear trend does not imply that the mean is constant – there may be a more complex relationship with time. This might be sinusoidal (#47), but an alternative exploratory model is a generalized additive model (GAM). A GAM finds significant changes in the mean. (This test is only appropriate)

Everybody (except #47) seems to be happy with the statement that the “annual hurricane counts from 1945 through 2006 are 100% compatible with a random Poisson process” without any goodness of fit test. Are they? Doesn’t anyone want to test this assertion?
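One simple goodness-of-fit check among several is the dispersion index D = sum((x - mean)^2) / mean. Under a Poisson model, D is roughly n - 1, because the variance equals the mean. The sketch below gets a one-sided p-value for overdispersion by simulating Poisson data with the same mean, which avoids relying on a chi-square table. The two toy datasets are invented purely to show the behaviour at the extremes.

```python
import math
import random

def poisson_sample(lam, rng):
    """Draw from Poisson(lam) by Knuth's multiplication method (fine for small lam)."""
    limit = math.exp(-lam)
    k, prod = 0, rng.random()
    while prod > limit:
        k += 1
        prod *= rng.random()
    return k

def dispersion_index(x):
    """Sum of squared deviations over the mean; roughly n - 1 for Poisson data."""
    m = sum(x) / len(x)
    return sum((v - m) ** 2 for v in x) / m

def dispersion_test(x, n_sim=999, seed=0):
    """One-sided Monte Carlo p-value for overdispersion relative to Poisson(mean of x)."""
    rng = random.Random(seed)
    lam = sum(x) / len(x)
    observed = dispersion_index(x)
    exceed = 0
    for _ in range(n_sim):
        sim = [poisson_sample(lam, rng) for _ in x]
        # an all-zero simulated sample has no defined index; count it as not exceeding
        if sum(sim) > 0 and dispersion_index(sim) >= observed:
            exceed += 1
    return observed, (exceed + 1) / (n_sim + 1)

over = [0] * 30 + [12] * 30   # toy data, far too spread out for Poisson(6)
under = [6] * 60              # toy data, with no spread at all
for label, data in (("over", over), ("under", under)):
    d, p = dispersion_test(data)
    print(f"{label}: D = {d:.1f} (about {len(data) - 1} expected under Poisson), p = {p:.3f}")
```

This test looks only at the variance. It would not catch, for example, serial correlation or a skewed shape with the right variance.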

curry#55 RichardT

This point is well taken. The Pearson version, as you correctly note, requires an assumption about normality. Given that we are looking at Poisson (?) data, there is reason to wonder about the robustness of the test.

In #38 I also provided results from two nonparametric tests: Kendall’s tau and Spearman’s rho. They do not require a distributional assumption. Also, because they are relatively powerful tests even when errors are normal, they are attractive alternatives to Pearson. However, while these tests are robust against mis-specification of the distribution, they are not robust against, for example, “red noise”. SteveM has written a lot on this topic; search CA for “red noise” and “trend”. Among other things, “red noise” can lead to a very high type 1 error rate — you find too many trends in trend-free stochastic processes.

Note that in this case all three tests, as well as an eyeball assessment, find no evidence of trend (the p-values for the 3 tests are .35, .71, and .61, respectively (#38)). I think we can safely conclude that whatever trend there is in the underlying process is small compared to the natural variability.

#56

These non-parametric tests, which will cope with the Poisson variance, can still only detect monotonic trends. If the relationship between hurricane counts and year is not monotonic, these tests are unsuitable, and there will be a high Type-2 error rate. Consider this code:

Even though there is an obvious relationship between x and y, the linear correlation test fails to find it.

You correctly state that these non-parametric tests are not robust against red noise. If the hurricane counts are autocorrelated, then they are not from an iid Poisson process, and the claim that the mean is constant is incorrect.

The code:

```r
x <- -10:10
y <- x^2 + rnorm(length(x), 0, 1)  # strong U-shaped (non-monotonic) relationship plus noise
cor.test(x, y)                      # Pearson test; p = 0.959 for my simulation
```

First, I want to join Willis (#47) in offering “special thanks to Steve M. for providing this statistical wonderland wherein we can discuss these matters.” CA really is an amazing place.

I would also call attention to Willis’s elegant and expressive graphics. Graphics are not a trivial thing; they are a critical component of statistical analyses (for those who disagree, Edward Tufte (author of “The Visual Display of Quantitative Information,” one of the most beautiful books ever written) presents excellent counter-arguments including an utterly convincing analysis of the 1986 Challenger disaster — it resulted from bad graphics!).

WRT #44 – #56, there have been a lot of comments, almost all of them interesting.

I think bender has it right, but the arguments on both sides deserve careful consideration. The reason for the disagreement, as I see it, has to do with ambiguity about rules for graphical presentation, specifically what is “conventional,” and some confusion about what we are trying to represent with the figure.

There is also a subtlety here that I am not sure I fully understand myself, but here goes: When you plot parameter estimates, error bars mean one thing (some sort of measure of the distance between the estimated value and the true parameter); when you plot data, they mean another (corresponding to the distance between the observed value and that single realization of the process). These two types of uncertainty are recognized, respectively, as epistemic and aleatory uncertainty (aka parameter uncertainty and natural variability). That’s why I asked the question in #41

which IL answered in #48

When looking at a graphic, the clue about which type of uncertainty is presented — i.e. what the error bars refer to — is whether or not we are looking at data or estimates. When estimates are based on the mean of samples of size N=1, as is the case here, there is an obvious problem: The viewer may assume that the plotted points are data. However, you could argue, as some have, that these are not data but estimates based on samples of size N=1. (IMHO, it makes no sense to estimate from samples of size N=1 when you have 63 observations available; but that’s another discussion). Unless care is taken in how the graphic is constructed, the ambiguity is bound to cause confusion.

Oops! Please note that in the last paragraph of #59, the “aleatory/natural variability” is incorrectly defined.

#59 Now that I look at it, the whole section containing the word “aleatory” is a mess. Best just to ignore it. 😉

#51 Bender, sorry to prolong an argument, but

No, I don’t think you are, and judging by your further response in #53 I’m not sure you have understood what I was arguing. This is not a case where we have some large population with a defined population mean and standard deviation, which we can estimate by repeatedly sampling that population and then use to characterize the whole data set. That is what you seem to be arguing when you say that each data point is representative of that mean and should have that same variance. When you have a few random, independent events, as in radioactive decay and, I submit, the storm counts per year, you do not have the situation that you describe.

Have you ever done an experiment where you have small counting statistics like a radioactive decay counting experiment for example? The process is described well in that link that Paul Linsay gave and it additionally describes the standard deviation on each individual data point as root N.

http://nucleus.wpi.edu/Reactor/Labs/R-stat.html

Exactly the same situation applies to photons recorded by a photomultiplier, and also to more commonplace situations such as calls to a call centre, admissions to a hospital, etc. Each summed count within a time interval gives a number, which is therefore the estimate of the mean for that interval: if the count in that interval is N, the variance is N and the standard deviation is root N. Each individual observation (each time period in the radioactive counting experiment, or each year in the storm-counting ‘experiment’) is an individual number subject to counting statistics. Where you have small probabilities of things happening but a large number of trials, so that probability x trials = a significant number, you are subject to counting statistics. Then each data point (each year, in the storms case that started everything off) has its own variance based on the number of storms counted in that year. Here I am making no assumptions about time stationarity or anything else; I am just looking at a sequence of small numbers generated by a process subject to counting statistics. This whole debate started because Paul Linsay put counting-statistics confidence limits on the data points in Figure 1. These are different for each point, and I agree with him: you do not have the same confidence limit on each data point, because each one is an independent measurement. If you want to compare them, to look for anomalous values or correlations with time or anything else, then you must look at the confidence limits for a particular year if you wish to test whether that year is anomalous, or test for changes in the mean if you wish to test for some correlation with time.

The mean value for all of the 63 years is 6.1. Suppose a particular year records, for example, 15. You might want to know the probability that that year is anomalous, and you would look at the standard deviation of that year, which is root 15, and compare it with other years. If you want to compare with neighbouring single years, then you would be comparing with the mean and standard deviation of each of those individual years; if comparing with the remaining 62 years, then you would sum up all 62 years and derive a mean value and a standard deviation, the latter being the root of all the storms in the 62 years.

#62 IL: Please take another look at the article that Paul provided and that you cite. It does not say:

Rather — and this is important — it specifies root M, where M is the true process mean (i.e. the expected value of N), which is not the observed value N.

Since this has become a forum on measurement error, let’s continue. It’s an interesting topic all by itself.

When you make a measurement there are two sources of error. One due to your instrument and the second due to natural fluctuations in the variable that you are measuring. The total error is the quadrature sum of these two.
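The quadrature rule is short enough to show directly. The sketch below assumes a hypothetical current reading of 100, in units where the shot-noise variance equals I (as in the example that follows). It shows how much meters of different intrinsic error add to the total.

```python
import math

def total_error(instrument_sigma, natural_sigma):
    """Combine two independent one-sigma errors in quadrature."""
    return math.sqrt(instrument_sigma ** 2 + natural_sigma ** 2)

current = 100.0                  # hypothetical reading, in units where shot-noise variance = I
shot_noise = math.sqrt(current)  # natural fluctuation: 10.0
for meter_error in (0.0, 1.0, 10.0):
    total = total_error(meter_error, shot_noise)
    print(f"meter error {meter_error:4.1f} -> total error {total:.2f}")
```

A meter error ten times smaller than the shot noise raises the total by only about half a percent (sqrt(101) ≈ 10.05). That is why an instrument "well below shot noise" can be ignored.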

As an example, consider measuring a current, I. It is subject to a natural fluctuation known as shot noise, with a variance proportional to I. I can build a current meter that has an intrinsic error well below shot noise, so that the error in any measurement is entirely due to shot noise. Now I take one measurement of the current. Its value happens to be I_1, hence the assigned error is +-sqrt(I_1) at the one sigma level. The experimental parameters change and a measurement of the current gives I_2, this time with an assigned error of +- sqrt(I_2). And so on. I never take more than one measurement in each situation, but no one would argue with my assignment of measurement error. [Maybe bender would, I don’t know!]

Now translate this to the case of the hurricanes. Instrumental error is zero. I can count the number this year perfectly, it’s N. If hurricanes are due to a Poisson process the count has an intrinsic variance of N. Hence the assigned hurricane count error is sqrt(N), exactly what I did in Figure 1.

#47, Willis

The bin heights of the histograms are subject to Poisson statistics too. Hence the errors are +-sqrt(bin height). You have to show that the fluctuations in the distributions are significantly outside these errors to warrant the sine wave. To paraphrase the old joke about the earth being supported by a turtle: It’s Poisson statistics all the way down.
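Paul's point can be made quantitative with a Pearson chi-square. Each squared difference between a bin height and the scaled Poisson curve is divided by the expected height, which amounts to using sqrt(expected height) as the Poisson error on that bin. The sketch below runs on simulated stand-in data, not on the actual counts. The bin layout (0 to 12 plus a ">= 13" tail bin) is an arbitrary choice.

```python
import math
import random

def poisson_sample(lam, rng):
    """Draw from Poisson(lam) by Knuth's multiplication method (fine for small lam)."""
    limit = math.exp(-lam)
    k, prod = 0, rng.random()
    while prod > limit:
        k += 1
        prod *= rng.random()
    return k

def histogram_chi_square(counts, lam, k_max):
    """Pearson chi-square of the histogram of yearly counts against n * Poisson(lam).

    Bins are k = 0 .. k_max-1 plus one tail bin for k >= k_max. Dividing each
    squared residual by the expected height treats sqrt(expected height) as
    the Poisson error on that bin.
    """
    n = len(counts)
    observed = [0] * (k_max + 1)
    for c in counts:
        observed[min(c, k_max)] += 1
    pmf = [math.exp(-lam) * lam**k / math.factorial(k) for k in range(k_max)]
    expected = [n * p for p in pmf] + [n * (1.0 - sum(pmf))]
    chi2 = sum((o - e) ** 2 / e for o, e in zip(observed, expected))
    return chi2, observed, expected

rng = random.Random(5)
simulated = [poisson_sample(6.1, rng) for _ in range(2000)]  # stand-in data, not real counts
chi2, obs, expct = histogram_chi_square(simulated, lam=6.1, k_max=13)
print(f"chi-square = {chi2:.1f} over {len(obs)} bins")
```

With only 63 years, the outer bins have expected heights well below 5. In that case the bins should be merged (or the p-value simulated) before reading anything into the statistic. The degrees of freedom are the number of bins minus one when lambda is fixed in advance, and one fewer if lambda is estimated from the same data.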

#63. Maybe that wasn’t the best link to work with, since it discusses Gaussian profiles and says that Poisson statistics are too difficult to work with! It’s only the true process mean M when you have a large number of counts, so that a Gaussian is an appropriate distribution to use. It makes the point further down that when you have a single count, the count becomes the estimate of the mean.

I therefore go back to my point that the appropriate confidence limit on a single year’s storm count is root N, where N is the number of counts in that year. If I had thousands of years’ worth of data, on your argument I would take the standard deviation of the total number of storms, which would number (say) 10,000, so the standard deviation would be 100. Are you going to argue that the appropriate error on each individual year is +/-100, or a fractional error of 100/10000 = 0.01 (times the mean of 6.1 = a confidence limit of 0.061 on each individual data point)? The former is clearly nonsense, and the latter is wrong because each year does not ‘know’ that there are thousands of years’ worth of data. It’s like tossing coins: I can toss a coin thousands of times and get a very precise mean value with a precisely determined standard deviation, but if I toss the coin again, that precision is not appropriate for working out the probability of what will happen next time, since the coin only ‘knows’ the probability of coming up a particular way on the next throw! Ditto if I want to look at an individual year in that sequence: I have to look at the count I have got for that year.

Please note, in that example of counting for thousands of years and getting 10,000 storms, I would be confident that I could determine the mean over all those years with a confidence limit of 1%, but the uncertainty in time on that mean value would then span that whole period of thousands of years. If I want to see whether there are long-term trends in that data, I can combine 100 years at a time to reduce the fractional error in each of the century means, and compare the mean value for each of those centuries with its standard deviation to test whether there is a significant change with time. But then you would plot a single mean value for each century, with a bar on the time axis spanning one century.

#47. Willis, this is rather fun. Off the top of my head, your arc sine graphic reminded me of two situations.

First, in Yule’s paper on spurious correlation, he has a graphic that looks like your sinusoidal graphic.

Second, arc sine distributions occur in an extremely important random walk theorem (Feller). The amount of time that a random walk spends on one side of 0 follows an arc sine distribution. When I googled “arc sine feller”, I turned up a climateaudit discussion here that I’d forgotten about: http://www.climateaudit.org/?p=310 .

So there might be some way of getting to an arc sine contribution without underlying cyclicity (which I am extremely reluctant to hypothesize in these matters.)
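The random-walk route can be checked by simulation. For a simple symmetric ±1 walk, Feller's arcsine law says that the fraction of time spent above zero has, in the limit, P(fraction < x) = (2/π)·arcsin(√x). On [0, 1] this is the same U-shaped arcsine distribution as the sine-wave values in #47, rescaled. The walk length, number of walks and seed below are arbitrary.

```python
import math
import random

def fraction_positive(n_steps, rng):
    """Fraction of steps of a simple +/-1 random walk spent above zero.

    A step counts as positive if either endpoint is above zero (Feller's convention),
    so every step is classified as above or below the axis without ambiguity.
    """
    s, positive = 0, 0
    for _ in range(n_steps):
        prev = s
        s += 1 if rng.random() < 0.5 else -1
        if prev > 0 or s > 0:
            positive += 1
    return positive / n_steps

rng = random.Random(310)
walks = [fraction_positive(500, rng) for _ in range(2000)]
empirical = sum(f < 0.1 for f in walks) / len(walks)
arcsine = 2 / math.pi * math.asin(math.sqrt(0.1))  # limiting arcsine law
print(f"P(time above zero < 10%): simulated {empirical:.3f}, arcsine law {arcsine:.3f}")
```

About a fifth of the walks spend less than 10% of their time above zero. No cycle is involved, only accumulated random steps.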

49,54 Correction:

lambda/n is the variance of the estimator; we don’t know lambda, but using $\hat{x}/n$ instead wouldn’t be too dangerous, I guess(?). Compare to the normal distribution case (estimate the mean, known variance $\sigma^2$): $\hat{x}$ is the MVB estimator of the mean, with variance $\sigma^2/n$.


I find Willis’ point (#47) about eliminating the sinusoidal wave interesting from a statistical perspective, but it also speaks to the underlying climate issues. What is the cause and climatic significance of the sinusoidal pattern Willis eliminated?

You have a record of 63 years, which in climate terms is virtually nothing. I have long argued that the use of a 30 year ‘normal’ as a statistical requirement is inappropriate for climate studies and weather forecasting. Current forecasting techniques assume the pattern within the ‘official’ record is representative of the entire record over hundreds of years and holds for any period, when this is not the case. It is not even the case when you extend the record out beyond 100 years. The input variables and their relative strengths vary over time so those of influence in one thirty year period are unlikely to be those of another thirty year period.

Climate patterns are made up of a vast array of cyclical inputs, from cosmic radiation to geothermal pulses from the magma underlying the crust. In between is the sun, the main source of energy input, with many other cycles, from the 100,000-year Milankovitch cycle to the 11-year (9-13 year variability) Schwabe sunspot cycle and those within the electromagnetic radiation. We could also include the sun’s orbit around the Milky Way and the 250 million year cycle associated with the transit through arms of galactic dust. My point is that the 63-year record is a composite of so many cycles, both known and unknown, that sorting them out in even a cursory way is virtually impossible with current knowledge. Is the 63-year period part of a larger upward or downward cycle, which in turn is part of an even larger upward or downward cycle? Now throw in singular events such as phreatic volcanic eruptions, which can measurably affect global temperatures for up to 10 years, and you have a problem of detecting overlapping causes of monumental proportions.

Interesting discussion.

#55

Not 100% compatible; that would be suspicious. And if I estimate lambda from the observations, and then observe that the 0.01 and 0.99 quantiles are exceeded only once with n=60, I think I have made a kind of goodness-of-fit test. I don’t claim that it is an optimal test, but at least I did it 😉

#62

Having trouble understanding what you are saying (sorry). In your link it is said that

IMO this is not the case here. TAC seems to agree with me.

#64

Makes no sense to me.

Re: #56

I have to continue going back to this statement and others like it to keep, what I view as the critical result coming out of this discussion, firmly in mind. To a layman with my statistical background I find the discussion about the Poisson distribution (and beyond) interesting and informative, but I also am inclined to view it as cutting the analysis of the data a bit too fine at this point.

I would guess that a chi square goodness of fit test or a kurtosis/skewness test for normality would not eliminate a Poisson and/or a normal distribution as applying here (without the sinusoidal correction). Intuitively, if one considers the TC event as occurring more or less randomly and based on the chance confluence of physical factors, the Poisson probability makes sense to me.

I agree with the Bender view on applicability of statistics and errors (but not necessarily extended to valuations of young NFL QBs) and his demands for error display bars. I have heard the stochastic mingling with physical processes arguments before but I keep going back to: stochastic processes arise from the study of fluctuations in physical systems.

Standard statistical distributions can be helpful in understanding and working with real life events but I am also aware of those fat tails that apply to real life (and maybe the 2005 TC NATL storm season).
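
To put a number on how loud 2005 is under the thin-tailed benchmark, the sketch below computes P(X >= 15) for X ~ Poisson(6.1), using the 15 hurricanes of 2005 and the 6.1 per year average from Paul's Figure 1. It assumes the plain Poisson model; if the real distribution has fatter tails, this probability understates how often such seasons occur.

```python
import math

def poisson_sf(k, lam):
    """P(X >= k) for X ~ Poisson(lam), as 1 minus the CDF at k - 1."""
    below = sum(math.exp(-lam + i * math.log(lam) - math.lgamma(i + 1)) for i in range(k))
    return 1.0 - below

lam = 6.1                      # average from Figure 1
p_2005 = poisson_sf(15, lam)   # chance of a season with 15 or more hurricanes
print(f"P(X >= 15) = {p_2005:.5f}; expected such years in 63: {63 * p_2005:.2f}")
```

This comes out at roughly 0.0016, so over a 63-year record one would expect about 0.1 such seasons under the pure Poisson model.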

68, Tim Ball: great post!

Steve Sadlov’s 2007 prediction : 6.1 +/- 2.449489743 — LOL!

Sorry I meant 6.1 +/- 2.469817807 😉

It would be more interesting to find a 95% confidence interval for any hypothetical trend that could be hidden in the noisy data. Do climate models make predictions that fall outside this confidence interval?
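
One rough way to answer this, assuming the null of iid Poisson counts with lambda = 6.1 over 63 years, a straight-line (OLS) trend, and a normal approximation with Var(count) = lambda in every year: the standard error of the fitted slope is sqrt(lambda / Sxx), where Sxx is the sum of squared deviations of the year index from its mean. The function name is hypothetical.

```python
import math

def hidden_trend_halfwidth(lam, n_years, z=1.96):
    """Approximate 95% half-width for an OLS slope (counts per year, per year)
    fitted to n_years of iid Poisson(lam) counts, taking Var(count) = lam."""
    t_mean = (n_years - 1) / 2
    sxx = sum((t - t_mean) ** 2 for t in range(n_years))   # equals n(n^2 - 1) / 12
    return z * math.sqrt(lam / sxx)

hw = hidden_trend_halfwidth(6.1, 63)
print(f"slope half-width: {hw:.4f}/yr per yr; over 62 years: {hw * 62:.2f} per yr")
```

Under these assumptions the half-width is about 0.034 hurricanes per year per year, i.e. about 2 hurricanes per year across the 62-year span, so a linear trend of that size could plausibly sit inside the noise. Whether model predictions fall outside that band is a separate question the sketch does not address.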

Paul,

I see John Brignell gave your post a mention at his site.

A little over half way down the page.

Steve M, you say:

I agree wholeheartedly. I hate to do it because it assumes facts not in evidence. I’ll take a look at your citation. Basically, what happens is that lambda varies with time. It is probably possible to remove the effects of that without assuming an underlying cycle. Exactly how to do that … unknown.
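
A check that needs no assumed cycle is the index-of-dispersion test: if lambda really varies from year to year, the counts should be more variable than a single Poisson allows (variance greater than mean). A minimal sketch, assuming iid counts under the null and using a crude normal approximation to the chi-square(n - 1) reference distribution. It only detects extra-Poisson variation and says nothing about its form, so it is a first step rather than a way to remove the effect.

```python
import math

def dispersion_test(counts):
    """Index-of-dispersion statistic D = sum((x - mean)^2) / mean.

    Under iid Poisson counts D is approximately chi-square with n - 1 degrees
    of freedom; D well above n - 1 suggests a time-varying lambda (overdispersion).
    """
    n = len(counts)
    mean = sum(counts) / n
    d = sum((x - mean) ** 2 for x in counts) / mean
    z = (d - (n - 1)) / math.sqrt(2 * (n - 1))   # crude normal approximation
    return d, n - 1, z
```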

w.

#65 IL: Thank you for your thoughtful comments.

Believe me: I understand your argument. I am familiar with the statistics of radioactive decay, and I know something about how physicists graph count data. The error bars corresponding to that problem (you describe it well) are designed to serve a specific purpose: to communicate what we know about the parameter lambda. The “root N” error bars, though not optimal (see below), are often used in this situation, and they are likely OK as long as the product of the arrival rate and the time interval is reasonably large. I have no argument on these points.

So what’s the issue? Well, we’re not dealing with radioactive decay, or with any of the other examples you cite. We’re dealing with statistical time series, and, IMHO, the relevant conventions for plotting such data come from the field of time series analysis, not radioactive decay. Specifically, when you plot a time series with error bars, the error bars are interpreted to indicate uncertainty in the plotted values. That’s what people expect. At least that’s what my cultural background leads me to believe.

[This discussion has a peculiar post-modern feel. Perhaps a sociologist of science can step in and explain what’s going on here?].

Anyway, here are some responses to other comments:

This is approximately correct if the only sample of the population that you have is the N observations and you are concerned with estimating the uncertainty in the arrival rate. If you want a confidence interval for the observed number of arrivals, however, the answer is [N,N]. (Incidentally, the root N formula is actually not a very good estimator of the standard error. For one thing, if you happen to get zero arrivals, you would conclude that the arrival rate was zero with no uncertainty).
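
One standard way around the zero-count problem is the exact (Garwood) Poisson interval, which inverts the Poisson CDF instead of using root N. A self-contained sketch that finds the limits by bisection; the helper names and the search bound are assumptions of this sketch.

```python
import math

def poisson_cdf(k, lam):
    # P(X <= k) for X ~ Poisson(lam); handles lam == 0 explicitly
    if lam == 0:
        return 1.0
    return sum(math.exp(-lam + i * math.log(lam) - math.lgamma(i + 1)) for i in range(k + 1))

def _bisect(f, lo, hi, iters=200):
    # root of a monotone f on [lo, hi], where f(lo) and f(hi) have opposite signs
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if (f(lo) < 0) == (f(mid) < 0):
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

def exact_poisson_ci(n_obs, conf=0.95):
    """Exact (Garwood) two-sided interval for lambda given n_obs arrivals."""
    alpha = 1.0 - conf
    top = n_obs + 10 * math.sqrt(n_obs + 1) + 10     # generous upper search bound
    # upper limit solves P(X <= n_obs | lam) = alpha / 2
    upper = _bisect(lambda lam: poisson_cdf(n_obs, lam) - alpha / 2, 0.0, top)
    if n_obs == 0:
        return 0.0, upper
    # lower limit solves P(X >= n_obs | lam) = alpha / 2
    lower = _bisect(lambda lam: 1.0 - poisson_cdf(n_obs - 1, lam) - alpha / 2, 0.0, top)
    return lower, upper
```

For zero observed arrivals the 95% upper limit is ln(40) ≈ 3.69 rather than 0, which addresses the objection in the parenthetical above.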

That’s a good point. Under the null hypothesis we have one population (of 63 iid Poisson variates). To estimate lambda, just add up all the events and divide by 63.

Then I would plot the data (the 63 observations, no error bars) and, as bender suggests, perhaps overlay the figure with horizontal lines indicating the estimated value of lambda (imagine a black line), an estimated confidence interval for lambda (blue dashes), and maybe some estimated population quantiles (red dots). I would not, however, attach error bars to the fixed observations. You know: the observation is fixed, right? The overlay would instead describe both the uncertainty in lambda and the estimated population quantiles.
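
The overlay quantities described above can be computed directly. A sketch assuming iid Poisson counts, a normal-approximation confidence interval for lambda (lambda_hat ± 1.96 sqrt(lambda_hat / n)), and 5% and 95% as illustrative population quantiles; the function name is hypothetical. Plotting is left out, but each value would become a horizontal line, e.g. with matplotlib's `axhline`.

```python
import math

def poisson_quantile(q, lam):
    # smallest integer k with P(X <= k) >= q
    k = 0
    p = math.exp(-lam)
    cdf = p
    while cdf < q:
        k += 1
        p *= lam / k          # recurrence p(k) = p(k - 1) * lam / k
        cdf += p
    return k

def overlay_lines(counts, z=1.96, quantiles=(0.05, 0.95)):
    """Horizontal reference levels for a plot of raw counts (no per-point error bars)."""
    n = len(counts)
    lam = sum(counts) / n                       # black line: estimated lambda
    se = math.sqrt(lam / n)                     # standard error of the mean under iid Poisson
    ci = (lam - z * se, lam + z * se)           # blue dashes: approximate CI for lambda
    q = tuple(poisson_quantile(p, lam) for p in quantiles)   # red dots: population quantiles
    return lam, ci, q
```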

OK. So how would you plot error bars for a time series of coin tosses? Note: a single coin toss can be modelled as a binomial rv with N=1 (i.e. a Bernoulli rv), whose variance is N*p*(1-p); since N=1, \hat{p} is always either zero or one, and your error bars have length zero…

If it’s random then that means we have no clue at all what causes it. Neatly done Paul.

#77 TAC. Thanks for your comments; your first paragraph in particular seems to indicate that maybe we are not as far apart as I thought. Maybe this reflects differences between areas of science, and we are arguing about presentation rather than substance, but to me Paul’s figure 1 is correct and meaningful. What you say

doesn’t make sense to me: if you have assumed a null hypothesis of no time variation and have summed all 63 years’ worth of data, then we only have one data point, the mean of the whole ensemble, with a smaller uncertainty on that ensemble mean but spanning all 63 years. What you suggest is having your cake and eating it, by taking results from the mean and applying them to individual data points.

I can see what you are getting at, but in all the fields I have worked in (physics related) what you suggest would be thrown out as misleading. I would never see a data point with no error bar, because I always see predictors; even if it’s a perfect observation of a discrete number of storms, presenting it as an exact number, to me, lies about the underlying physics. Perhaps ultimately, as long as there is a good description of what is going on and we calculate confidence limits, the significance of anomalous readings, and trends correctly, it doesn’t matter too much.

I still think though that the basic problem here between us is when you say

No, physically this is exactly like radioactive decay or finding the pennies that I described or admissions to a hospital or any of those similar situations where counting statistics applies – the underlying physics is the same where we have a very small probability of a storm arising in a particular time or place but over a year there are a few. It is not then a statistical time series where I am sampling from a larger population with some underlying mean and variance.

I guess, as I say, as long as we correctly calculate the significance of time variations etc. and it’s well explained what is done or displayed, this discussion has probably gone about as far as it can.

My final 2p on all of this. To me, Paul’s figure 1 correctly conveys the uncertainties inherent in the physics; what you and Bender suggest seems, to someone with my background, misleading; and what Judith Curry presented (way back in the other thread that started all of this, with the 11 year moving average) is wrong and dangerously misleading.

Paul:

1) What if the count for some year is zero (TAC’s point in 77)?

2) How would you draw those bars if you assume Gaussian distribution instead of Poisson?

I think I understand now (please correct me if I’m wrong!). You observe y(t) = x(t) + n(t), where n(t) is the error due to the instrument and x(t) is a stochastic process. x(t) varies over time, and you are not very interested in x(t) per se; you want a more general estimate: what is x(t+T), x(t-T), etc. If the process is stationary, it has an expected value. Your second error is E(x)-x(t), am I right? If so,

1) I think that ‘error’ is a misleading term

2) ‘natural fluctuations’ without explanation opens the gate for 9-year averages and Ritson’s coefficients.

If you define it as a stochastic process, you’ll have many tools that are not ad hoc (the Kalman filter, for example) to deal with the problem.

Often ad hoc methods are as effective as carefully defined statistical procedures, but the difference is that the latter give the researcher fewer degrees of freedom. If you have 2^16 options for manipulating your data, you’ll get any result you want from any data set. Popper wouldn’t like that.

#79 IL: I agree that our difference has to do almost entirely with form, not substance, and even there I agree we’re not far apart. When you say:

My only quibble would be that the physics are different; it’s the stats that are the same; and the “cultural context” (the graphical conventions employed by the target audience) differs.

So, the remaining issue: How to communicate the message, which we agree on, as unambiguously as possible to the community we want to reach.

As I understand it, you are comfortable with — prefer — error bars attached to original data; I worry that such error bars introduce ambiguity to the figure (I also question their statistical interpretation, but that’s a secondary issue). I prefer an overlay or separate graphics.

Of course, we do not have to resolve this. But, having now debated this thorny issue for half a week, perhaps this could be a real contribution to the literature. Consistency and rigor in graphics is important — perhaps as important as consistency and rigor in statistics, though less appreciated.

Perhaps we could come up with a whole new graphical method for plotting Poisson time series — get Willis involved to ensure the aesthetics, and other CA regulars who wanted to get involved could contribute — and share it with the world ;-).

I say we name it after SteveM!

Time to get some coffee…

#79 IL: One final point:

I don’t know if I should admit this, but, in the sense you describe, statisticians do “have their cake and eat it too”: it is standard practice in time series analysis. For example, one often begins a data analysis by testing the distribution of errors assuming the sample is iid, before settling on a time series model. Then one looks at possible time-series models, rechecking the distribution of model errors based on the hypothesized model, etc., etc. It’s called model building. Perhaps it is indiscreet of me to mention this…

It does raise a question: How would you develop error bars for a non-trivial ARMA — let’s start with an AR(1) — time series with Poisson errors?
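
One candidate answer, not proposed in the thread itself, is to swap in an explicit count-valued AR(1) model such as the Poisson INAR(1) process, which uses binomial thinning so the series stays integer-valued with a Poisson marginal. The sketch below simulates it; with fitted lambda and alpha, "error bars" could then be read off as quantiles across many simulated realizations. Parameter values and function names are illustrative assumptions.

```python
import math
import random

def poisson_draw(lam, rng):
    # Knuth's multiplication method; fine for the small lambdas used here
    limit, k, prod = math.exp(-lam), 0, rng.random()
    while prod > limit:
        k += 1
        prod *= rng.random()
    return k

def simulate_inar1(lam, alpha, n, rng):
    """Poisson INAR(1): X_t = alpha o X_{t-1} + e_t, where 'o' is binomial thinning
    and e_t ~ Poisson(lam * (1 - alpha)); the stationary marginal is Poisson(lam)
    and the lag-1 autocorrelation is alpha."""
    x = poisson_draw(lam, rng)                  # start in the stationary distribution
    series = [x]
    for _ in range(n - 1):
        survivors = sum(1 for _ in range(x) if rng.random() < alpha)   # each survives w.p. alpha
        x = survivors + poisson_draw(lam * (1 - alpha), rng)          # plus new arrivals
        series.append(x)
    return series

def lag1_autocorr(xs):
    # sample lag-1 autocorrelation
    m = sum(xs) / len(xs)
    num = sum((a - m) * (b - m) for a, b in zip(xs, xs[1:]))
    den = sum((a - m) ** 2 for a in xs)
    return num / den
```

For example, `simulate_inar1(6.1, 0.5, 63, random.Random(1))` produces one 63-year realization; repeating with many seeds gives an ensemble from which per-year quantiles can be taken.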

Don’t know about a coffee TAC, perhaps we could have a beer or two….

I’m not really trying to get in the last word, but I think the physics IS the same.

OK, in a literal sense, radioactivity is due to quantum fluctuations and tunnelling, while hurricanes are a macroscopic physical process. But what is fundamental to the problem, and why I think what you and Bender suggest is inappropriate, is that there is a very small probability of a hurricane arising in any given area at any given time; it’s only when we integrate over a large area (an ocean basin) and a long time (a year) that we find up to several hurricanes. Each, on the treatment above, is a random, independent event caused by a low-probability process, which is why the statistics, and the underlying physics of those statistics, are the same as in these other areas of physics.
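
The "small probability per area and time, summed over a basin and a year" argument is the Poisson limit of the binomial. A sketch that makes it quantitative, treating a season as n independent space-time cells, each with probability lambda/n of producing a hurricane (the cell picture is an illustrative assumption, not a physical model), and measuring the largest pmf difference from Poisson(6.1).

```python
import math

def binom_pmf(k, n, p):
    # exact binomial probability via log-gamma to avoid huge factorials
    log_c = math.lgamma(n + 1) - math.lgamma(k + 1) - math.lgamma(n - k + 1)
    return math.exp(log_c + k * math.log(p) + (n - k) * math.log1p(-p))

def poisson_pmf(k, lam):
    return math.exp(-lam + k * math.log(lam) - math.lgamma(k + 1))

def max_gap(n_cells, lam=6.1, k_max=30):
    """Largest |Binomial(n_cells, lam/n_cells) - Poisson(lam)| over k = 0..k_max."""
    p = lam / n_cells
    return max(abs(binom_pmf(k, n_cells, p) - poisson_pmf(k, lam)) for k in range(k_max + 1))
```

As the number of cells grows with the expected total held at 6.1, the gap shrinks toward zero, which is the sense in which many rare, independent chances produce Poisson counts.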

Getting climate scientists like Judith Curry to discuss that inherent uncertainty in yearly counts would be really interesting. Having said that, and this is where it could get really interesting, as Margo pointed out some time back, there is a possibility that hurricane formation is not independent: the more hurricanes there are in a year, the more predisposed the system is to form more, through understandable physical mechanisms. That would take us to a new level of interest, but since I see conclusions on increasing hurricane intensity based on 11 year moving averages with no apparent discussion of the inherent uncertainties in a probability system like this, I think there is a long way to go before we can tackle such questions.

#80, UC

(1) sqrt(0) = 0, no error bars, just a data point

(2) once N is large enough, about 10 to 20, the difference between Poisson and Gaussian becomes small. The Gaussian has mean N and variance N. The error bars would still be ±sqrt(N) at one sigma.
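
How large N must be depends on what "small" has to mean. A sketch that quantifies it as the largest gap between the Poisson CDF and a Normal(N, N) CDF with a continuity correction; this is one choice of metric among several, and tail probabilities can disagree more in relative terms than this absolute measure suggests.

```python
import math

def poisson_vs_normal(lam):
    """Max |Poisson(lam) CDF - Normal(lam, lam) CDF|, with a continuity correction."""
    worst, cdf = 0.0, 0.0
    k_hi = int(lam + 10 * math.sqrt(lam) + 10)      # far enough into the upper tail
    for k in range(k_hi + 1):
        cdf += math.exp(-lam + k * math.log(lam) - math.lgamma(k + 1))
        z = (k + 0.5 - lam) / math.sqrt(lam)
        normal_cdf = 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))
        worst = max(worst, abs(cdf - normal_cdf))
    return worst
```

Comparing `poisson_vs_normal(10)`, `(20)` and `(1000)` shows the gap shrinking roughly like 1/sqrt(N), consistent with the Poisson skewness being 1/sqrt(N).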

Ritson used to be an experimental particle physicist, my training and career for a while too, so I’d expect that he would understand the way I plotted the data and error bars.

The problem with climate time-series data like these hurricane data is that you have one instance, one realization, one sample, drawn from a large ensemble of possible realizations of a stochastic process. You want to make inferences about the ensemble (i.e. all those series that could be produced by the terawatt heat engine), but based on a single stochastic realization.
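
The single-realization point can be illustrated by simulation: draw many independent 63-year series from a trendless Poisson(6.1) process (the null from Paul's Figure 1), smooth each with an 11-year moving average, and see how much the smoothed curve wanders by chance alone. A sketch under those assumptions, with hypothetical function names; it uses only complete windows, so it does not reproduce the edge handling of any particular published plot.

```python
import math
import random

def poisson_draw(lam, rng):
    # Knuth's multiplication method
    limit, k, prod = math.exp(-lam), 0, rng.random()
    while prod > limit:
        k += 1
        prod *= rng.random()
    return k

def moving_average(xs, window):
    # one value per complete window (no edge padding)
    return [sum(xs[i:i + window]) / window for i in range(len(xs) - window + 1)]

def ensemble_ma_ranges(lam=6.1, n_years=63, window=11, n_real=1000, seed=0):
    """Median and 95th percentile of (max - min) of the moving average
    across n_real trendless Poisson(lam) realizations."""
    rng = random.Random(seed)
    ranges = []
    for _ in range(n_real):
        series = [poisson_draw(lam, rng) for _ in range(n_years)]
        ma = moving_average(series, window)
        ranges.append(max(ma) - min(ma))
    ranges.sort()
    return ranges[n_real // 2], ranges[int(0.95 * n_real)]
```

Comparing the returned spread with the rise seen in a smoothed hurricane plot gives a rough sense of whether that rise exceeds what chance alone produces in a trendless process.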

Any climate scientist who does not understand this – and its statistical implications – should have their degree(s) revoked.

In contrast, physical time-series data that are generated by a highly deterministic process do not face the same statistical challenge. Often the physical process is so deterministic that you never stopped to think about the existence of an ensemble. Why would you?

Hey people, off topic I know, but seeing what good work you amateurs are doing, I can’t resist citing this little gem, which seems taken directly from RealClimate:

This was from G. G. Simpson, talking about proponents of continental drift in 1943…

(quoted in Drifting Continents and Shifting Theories, by H.E. LeGrand, p. 102)

I got lost. Did you guys agree whether “error bars” should be put on the count data?

#87

No, didn’t agree. But I’ll try to find the book suggested in #11 and learn (found William Price, Nuclear Radiation Detection, 1964; will that do?)

1) and 2) in #84 make no sense to me (*), but I’m here to learn. I agree with #85.

(*) except ‘difference between Poisson and Gaussian becomes small’, but replace 10 to 20 with 1000

#87 No, I guess not. But there is no way I am wrong – or my name isn’t Michael Mann

🙂